Kevin Cuzner's Personal Blog

Electronics, Embedded Systems, and Software are my breakfast, lunch, and dinner.

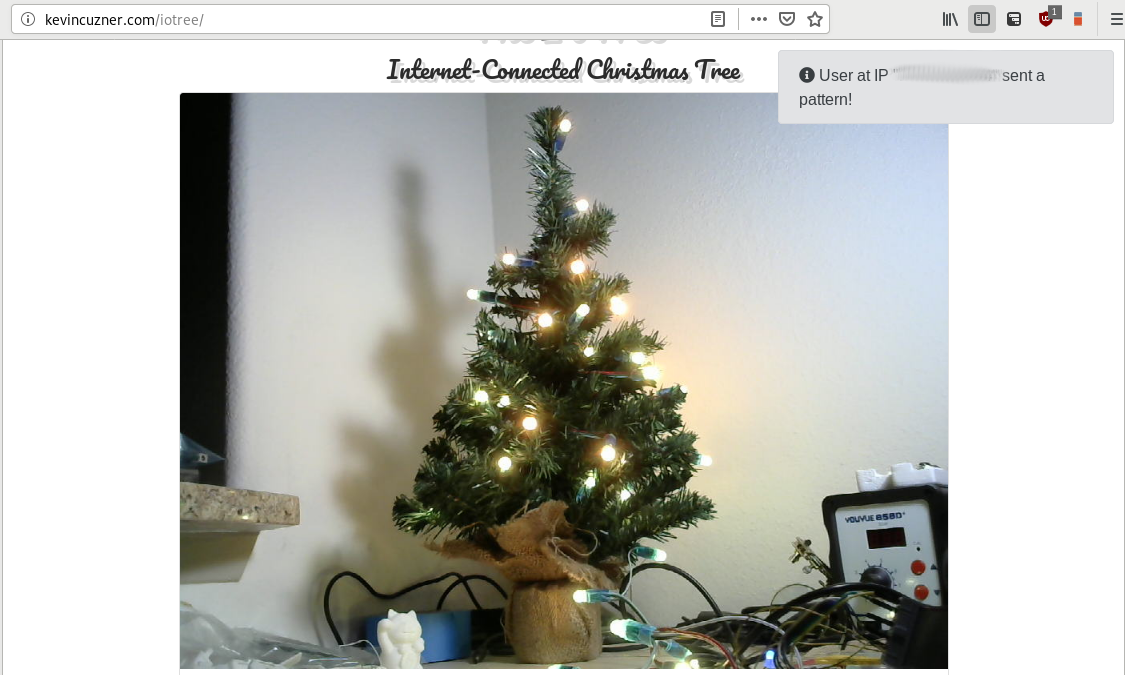

The IoTree: An internet-connected tree

This Christmas I decided to do something fun with my Christmas tree: hook it up to the internet. Visit the IoTree here (available through the first week of January 2019):

The complete source can be found here:

http://github.com/kcuzner/iotree

The IoTree is an interface that allows anyone to control the pattern of lights shown on the small Christmas tree on my workbench. It consists of the following components:

- My Kinetis KL26 breakout board (http://github.com/kcuzner/kl2-dev). This controls a string of 50 WS2811 LEDs which are zip-tied to the tree.

- A Raspberry Pi (mid-2012 vintage) which parses pattern commands coming from the cloud into LED sequences which are downloaded to the KL26 over SPI. It also hosts a webcam and periodically throws the image back up to the cloud so that the masses can see the results of their labors.

- A cloud server which hosts a Redis instance and a Python application that serve the user interface and handle communication down to the Raspberry Pi.

I'm going to briefly go over each of these pieces and some of the challenges I had getting everything to work.
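The exact format of the LED sequences sent from the Pi to the KL26 isn't spelled out above. Here is a purely hypothetical sketch of flattening one frame for the 50 WS2811s into a byte buffer for an SPI transfer. It assumes one 3-byte RGB triplet per LED, in string order, with no header. `pack_frame` and this frame layout are my assumptions, not the IoTree's actual protocol.

```python
from typing import List, Tuple

NUM_LEDS = 50  # the tree has a string of 50 WS2811 LEDs

Color = Tuple[int, int, int]


def pack_frame(colors: List[Color]) -> bytes:
    """Flatten per-LED (r, g, b) tuples into one byte buffer.

    Hypothetical format: 3 bytes per LED, LED 0 first, no header.
    """
    if len(colors) != NUM_LEDS:
        raise ValueError(f"expected {NUM_LEDS} colors, got {len(colors)}")
    frame = bytearray()
    for r, g, b in colors:
        for channel in (r, g, b):
            if not 0 <= channel <= 255:
                raise ValueError(f"channel value out of range: {channel}")
            frame.append(channel)
    return bytes(frame)


# Example: first LED white, the rest dim red
frame = pack_frame([(255, 255, 255)] + [(32, 0, 0)] * (NUM_LEDS - 1))
```

Validating the length and channel ranges on the Pi side keeps a malformed pattern command from ever reaching the microcontroller.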

Building a USB bootloader for an STM32

As the final installment in my posts about the LED Wristwatch project, I wanted to write about the self-programming bootloader I made for an STM32L052 and describe how it works. So far it has proven fairly robust, and I haven't had to get out my STLink to reprogram the watch for quite some time.

The main objective of this bootloader is to allow the device to be reprogrammed without an external programmer. There are generally two ways a microcontroller can accomplish this:

- Include a binary image in every compiled program that is copied into RAM and runs a bootloader program that allows for self-reprogramming.

- Reserve a section of flash for a bootloader that can reprogram the rest of flash.

Each approach has its pros and cons. Option 1 allows the user program to use all available flash (aside from the blob size and bootstrapping code). It also might not require a relocatable interrupt vector table (something that some ARM Cortex microcontrollers lack). However, it also means there is no way to recover without using JTAG or SWD to reflash the microcontroller if you somehow mess up the switchover into the bootloader. Option 2 allows for a fairly fail-safe bootloader: the bootloader is always there, even if the user program is not working right, so as long as the device provides a hardware method for entering bootloader mode, it can always be recovered. However, Option 2 makes the bootloader itself difficult to update (you have to flash it with a special program that overwrites the bootloader), wastes any unused space in the bootloader-reserved section, and requires some features that not all microcontrollers have.

Because the STM32L052 has a large amount of flash (64K) and implements the vector-table-offset register (allowing the interrupt vector table to be relocated), I decided to go with Option 2. Example code for this post can be found here:

**https://github.com/kcuzner/led-watch**
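With Option 2, the bootloader has to decide at reset whether a usable user program is present before jumping to it. A common check is whether the user program's vector table looks sane. Word 0 of a Cortex-M vector table is the initial stack pointer and word 1 is the reset handler address. Here is a minimal Python sketch of that check, assuming the STM32L052x8 memory map (64 KB flash at 0x08000000, 8 KB SRAM at 0x20000000) and a hypothetical 8 KB bootloader region. It illustrates the idea and is not the code in the led-watch repository.

```python
import struct

# Memory map assumptions for an STM32L052x8
FLASH_START = 0x08000000
FLASH_SIZE = 64 * 1024
RAM_START = 0x20000000
RAM_SIZE = 8 * 1024
BOOTLOADER_SIZE = 8 * 1024  # hypothetical size of the reserved bootloader region
APP_START = FLASH_START + BOOTLOADER_SIZE


def app_looks_valid(vector_table: bytes) -> bool:
    """Sanity-check the first two words of a user program's vector table."""
    if len(vector_table) < 8:
        return False
    initial_sp, reset_vector = struct.unpack_from("<II", vector_table, 0)
    # The initial stack pointer must land inside SRAM (the top of RAM itself is
    # the usual value) and be word aligned. Erased flash reads 0xFFFFFFFF,
    # so an empty application region fails here.
    sp_ok = RAM_START < initial_sp <= RAM_START + RAM_SIZE and initial_sp % 4 == 0
    # The reset handler must have the Thumb bit set and point into the
    # application region, not into the bootloader.
    handler = reset_vector & ~1
    reset_ok = bool(reset_vector & 1) and APP_START <= handler < FLASH_START + FLASH_SIZE
    return sp_ok and reset_ok
```

On the device, the same logic would read the two words directly from the application's base address before setting the vector table offset register and jumping.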


Bare metal STM32: Writing a USB driver

A couple of years ago I wrote a post about writing a bare metal USB driver for the Teensy 3.1, which uses a Freescale Kinetis K20 microcontroller. Over the past couple of years I've switched to using the STM32 series of microcontrollers instead, since they are cheaper to program the "right" way (the dirt-cheap STLink v2 makes that possible). I almost always prefer to use the microcontroller IC by itself rather than building around a development kit, since I find that much more interesting.

One of my recent (or not so recent) projects was an LED Wristwatch which utilized an STM32L052. This microcontroller is optimized for low power, but contains a USB peripheral which I used for talking to the wristwatch from my PC, both for setting the time and for reflashing the firmware. This was one of my first hobby projects where I designed something without any prior breadboarding (beyond the battery charger circuit). The USB and such was all rather "cross your fingers and hope it works" and it just so happened to work without a problem.

In this post I'm only going to cover a small portion of what I learned from the USB portion of the watch. There will be further follow-up posts on making the watch show up as a HID device and on writing a USB bootloader.

Example code for this post can be found here:

**https://github.com/kcuzner/led-watch**

(mainly in common/src/usb.c and common/include/usb.h)

My objective here is to walk quickly through the operation of the USB Peripheral, specifically the Packet Memory Area, then talk a bit about how the USB Peripheral does transfers, and move on to how I structured my code to abstract the USB packetizing logic away from the application.
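One rule a packetizing layer has to hide from the application is how a transfer is split into packets and how it ends. The host asks for up to wLength bytes. The device sends max-packet-size chunks, and a short packet ends the transfer. If the data is an exact multiple of the packet size and shorter than what was requested, a zero-length packet (ZLP) marks the end. Here is a minimal, host-testable Python sketch of that rule. The `packetize` helper is hypothetical and is not the API in usb.c.

```python
from typing import List


def packetize(data: bytes, max_packet_size: int, requested_length: int) -> List[bytes]:
    """Split an IN transfer into the packets a USB device would send.

    The transfer ends on a short packet. If the payload is shorter than the
    host requested and an exact multiple of max_packet_size, a zero-length
    packet (ZLP) is appended to terminate it.
    """
    payload = data[:requested_length]  # never send more than the host asked for
    packets = [payload[i:i + max_packet_size]
               for i in range(0, len(payload), max_packet_size)]
    if len(payload) < requested_length and len(payload) % max_packet_size == 0:
        packets.append(b"")  # ZLP: tells the host no more data is coming
    return packets
```

For example, a 64-byte descriptor answering a 255-byte request on a 64-byte endpoint goes out as one full packet followed by a ZLP. A 100-byte reply to a 9-byte request is simply truncated to 9 bytes.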

Arranging components in a circle with Kicad

I've been using KiCad for just about all of my designs for a little over 5 years now. There was a bit of a learning curve, but I've really come to love it, especially with the improvements by CERN that came out in version 4. One of the greatest features, in my opinion, is the Python Scripting Console in the PCB editor (pcbnew). It gives (more or less) complete access to the design hierarchy so that things like footprints can be manipulated in a scripted fashion.

In my most recent design, the LED Watch, I used this to script myself a tool for arranging footprints in a circle. Today I want to show how I did it and how to use it, so that you can make your own scripting tools (or just arrange stuff in a circle).

*The Python console can be found in pcbnew under Tools->Scripting Console.*

Step 1: Write the script

When writing a script for pcbnew, it is usually helpful to have some documentation. Some can be found here, though I mostly used "dir" a whole bunch and had it print me the structure of the various objects once I found the points to hook in. The documentation is fairly spartan at this point, so "dir" made things much easier.

Here's my script:

```python
#!/usr/bin/env python2

# Random placement helpers because I'm tired of using spreadsheets for doing this
#
# Kevin Cuzner

import math
from pcbnew import *

def place_circle(refdes, start_angle, center, radius, component_offset=0, hide_ref=True, lock=False):
    """
    Places components in a circle
    refdes: List of component references
    start_angle: Starting angle
    center: Tuple of (x, y) mils of circle center
    radius: Radius of the circle in mils
    component_offset: Offset in degrees for each component to add to angle
    hide_ref: Hides the reference if true, leaves it be if None
    lock: Locks the footprint if true
    """
    pcb = GetBoard()
    # Float division: in Python 2, 360 / 7 would truncate to 51
    deg_per_idx = 360.0 / len(refdes)
    for idx, rd in enumerate(refdes):
        part = pcb.FindModuleByReference(rd)
        if part is None:
            print "{0}: not found, skipping".format(rd)
            continue
        angle = (deg_per_idx * idx + start_angle) % 360
        print "{0}: {1:g}".format(rd, angle)
        xmils = center[0] + math.cos(math.radians(angle)) * radius
        ymils = center[1] + math.sin(math.radians(angle)) * radius
        part.SetPosition(wxPoint(FromMils(xmils), FromMils(ymils)))
        # Orientation is in tenths of a degree
        part.SetOrientation((angle + component_offset) * -10)
        if hide_ref is not None:
            part.Reference().SetVisible(not hide_ref)
        if lock:
            part.SetLocked(True)
    print "Placement finished. Press F11 to refresh."
```

There are several arguments to this function: a list of reference designators (["D1", "D2", "D3"] etc), the angle at which the first component should be placed, the position in mils for the center of the circle, and the radius of the circle in mils. Once the function is invoked, it will find all of the components indicated in the reference designator list and arrange them into the desired circle.
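The geometry doesn't depend on pcbnew at all, so it can be checked outside KiCad. Here is a sketch that pulls the same math into a standalone function. `circle_positions` is a hypothetical helper, not part of placement_helpers.py. Keep in mind that KiCad's Y axis points down, so increasing angles run clockwise on screen.

```python
import math
from typing import List, Tuple


def circle_positions(count: int, start_angle: float,
                     center: Tuple[float, float],
                     radius: float) -> List[Tuple[float, float, float]]:
    """Return (x_mils, y_mils, angle_deg) for count evenly spaced parts."""
    deg_per_idx = 360.0 / count  # float division so odd counts space evenly
    result = []
    for idx in range(count):
        angle = (deg_per_idx * idx + start_angle) % 360
        x = center[0] + math.cos(math.radians(angle)) * radius
        y = center[1] + math.sin(math.radians(angle)) * radius
        result.append((x, y, angle))
    return result
```

Calling `circle_positions(2, 0, (500, 500), 1000)` gives angles of 0 and 180 degrees, the same as the D1/D2 console session shown below.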

Step 2: Save the script

To make life easier, it is best to save the script somewhere the pcbnew Python interpreter knows to look. I found a good location at "/usr/share/kicad/scripting/plugins", but the list of all paths that will be searched can easily be found by opening the Python console and executing "import sys" followed by "print(sys.path)". Pick a path that makes sense and save your script there. I saved mine as "placement_helpers.py" since I intend to add more functions to it as situations require.
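If you would rather not write into a system directory like /usr/share/kicad, you can keep the script in your home directory instead and add that directory to `sys.path` from the console before importing. Here is a sketch that works in both Python 2 and 3. The `~/kicad_scripts` directory and the `add_script_dir` helper are my own assumptions.

```python
import os
import sys


def add_script_dir(path):
    """Append a script directory to sys.path (once) so its modules import."""
    full = os.path.abspath(os.path.expanduser(path))
    if full not in sys.path:
        sys.path.append(full)
    return full


# Hypothetical location for your own scripts; adjust to taste
add_script_dir("~/kicad_scripts")
```

After pasting this into the console, `import placement_helpers` works as long as placement_helpers.py lives in that directory.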

Step 3: Open your PCB and run the script

Before you can use the script on your footprints, the footprints need to be on the board, so make sure you execute the "Read Netlist" command before continuing.

The scripting console can be found under Tools->Scripting Console. Once it is opened you will see a standard Python 2 command prompt. If you placed your script in a location where the Scripting Console will search, you should be able to do something like the following:

```
PyCrust 0.9.8 - KiCAD Python Shell
Python 2.7.13 (default, Feb 11 2017, 12:22:40)
[GCC 6.3.1 20170109] on linux2
Type "help", "copyright", "credits" or "license" for more information.
>>> import placement_helpers
>>> placement_helpers.place_circle(["D1", "D2"], 0, (500, 500), 1000)
D1: 0
D2: 180
Placement finished. Press F11 to refresh.
>>>
```

Now, pcbnew may not recognize that your PCB has changed, so the save button may stay disabled. Make some other board modification, such as laying a trace, so that you can save the changes the script made. I'm sure there's a way to trigger this from Python, but I haven't gotten around to trying it yet.

Conclusion

Hopefully this brief tutorial will either help you to place components in circles in Kicad/pcbnew or will help you to write your own scripts for easing PCB layout. Kicad can be a very capable tool and with its new expanded scripting functionality, the sky seems to be the limit.

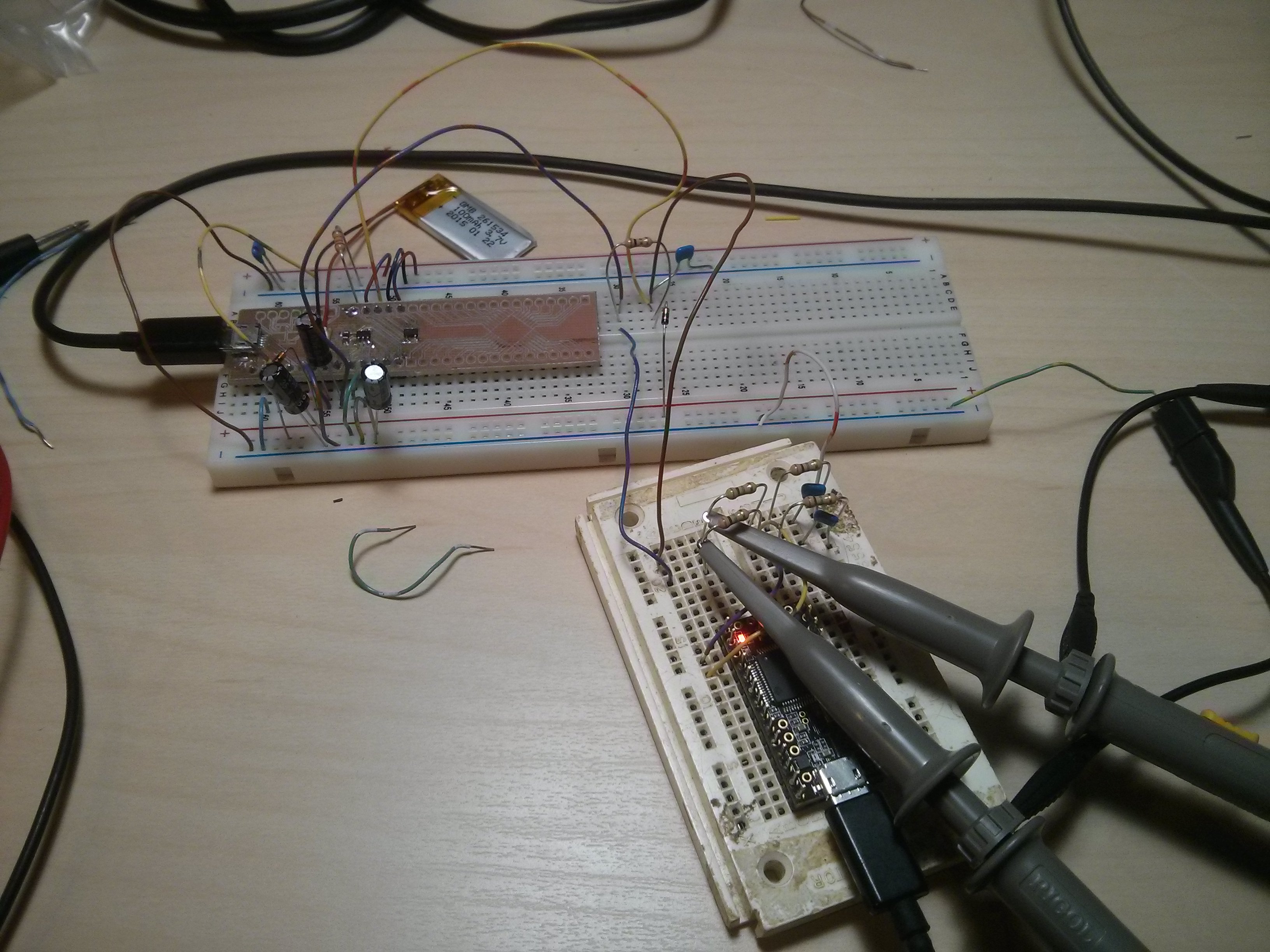

Quick-n-dirty data acquisition with a Teensy 3.1

The Problem

I am working on a project that involves a Li-Ion battery charger. I've never built one of these circuits before and I wanted to test the battery over its entire charge-discharge cycle to make sure it wasn't going to burst into flame because I set some resistor wrong. The battery itself is very tiny (100 mAh, 2.5 mm thick) and is going to be powering an extremely low-power circuit, hopefully for many weeks between charges.

After about two days of taking meter measurements every 6 hours or so to see how far the voltage had dropped, I decided to automate this process. I had my trusty Teensy 3.1 lying around, so I figured it would be pretty easy to turn it into a simple data logger that measures the voltage at a very slow rate (maybe one measurement every 5 seconds). Thus was born the EZDAQ.

All code for this project is located in the repository at https://github.com/kcuzner/ezdaq

Setting up the Teensy 3.1 ADC

I had never used the ADC on the Teensy 3.1 before. I don't use the Teensy Cores HAL/Arduino library because I find it more fun to twiddle the bits and write the makefiles myself. Of course, this also means that I don't always get a project working within 30 minutes.

The ADC on the Teensy 3.1 (or the Kinetis MK20DX256) is capable of doing 16-bit conversions at 400-ish ksps. It is also quite complex and can do conversions in many different ways. It is one of the larger and more configurable peripherals on the device, probably rivaled only by the USB module. The module does not come pre-calibrated and requires a calibration cycle to be performed before its accuracy will match that specified in the datasheet. My initialization code is as follows:

```c
void adc_init(void) //function name illustrative; runs once at startup
{
    uint16_t temp;

    //Enable ADC0 module
    SIM_SCGC6 |= SIM_SCGC6_ADC0_MASK;

    //Set up conversion precision and clock speed for calibration
    ADC0_CFG1 = ADC_CFG1_MODE(0x1) | ADC_CFG1_ADIV(0x1) | ADC_CFG1_ADICLK(0x3); //12 bit conversion, adc async clock, div by 2 (<3MHz)
    ADC0_CFG2 = ADC_CFG2_ADACKEN_MASK; //enable async clock

    //Enable hardware averaging (AVGE; AVGS(3) = 32 samples) and start calibration
    ADC0_SC3 = ADC_SC3_CAL_MASK | ADC_SC3_AVGE_MASK | ADC_SC3_AVGS(0x3);
    while (ADC0_SC3 & ADC_SC3_CAL_MASK) { } //CAL clears itself when calibration completes
    if (ADC0_SC3 & ADC_SC3_CALF_MASK) //calibration failed. Quit now while we're ahead.
        return;

    //Plus-side gain: sum the results, halve, set the MSB (bit 15)
    temp = ADC0_CLP0 + ADC0_CLP1 + ADC0_CLP2 + ADC0_CLP3 + ADC0_CLP4 + ADC0_CLPS;
    temp /= 2;
    temp |= 0x8000;
    ADC0_PG = temp;
    //Minus-side gain: same procedure
    temp = ADC0_CLM0 + ADC0_CLM1 + ADC0_CLM2 + ADC0_CLM3 + ADC0_CLM4 + ADC0_CLMS;
    temp /= 2;
    temp |= 0x8000;
    ADC0_MG = temp;

    //Set clock speed for measurements (no division)
    ADC0_CFG1 = ADC_CFG1_MODE(0x1) | ADC_CFG1_ADICLK(0x3); //12 bit conversion, adc async clock, no divide
}
```

Following the recommendations in the datasheet, I selected a clock that brings the ADC clock speed down to <4MHz and turned on hardware averaging before starting the calibration. Calibration is initiated by setting the CAL flag in ADC0_SC3. When it completes, CAL clears, CALF indicates whether it failed, and the results are found in the ADC0_CL* registers. The plus-side results (CLP0-CLP4 and CLPS) are summed, halved, and have their most significant bit set before being written to the plus-side gain register ADC0_PG. The minus-side results (CLM0-CLM4 and CLMS) go through the same steps into ADC0_MG. I'm not 100% certain how this calibration works, but I believe it is computing values that trim something in the SAR logic (probably something in the internal DAC) in order to shift the converted values into spec.
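The gain computation at the end of calibration is easy to get wrong, so here it is as a host-side Python sketch of the procedure in the Kinetis reference manual. `adc_gain_word` is a hypothetical helper, and the register values in the example are made up. Since the gain registers are 16 bits wide, "set the MSB" means OR-ing in 0x8000.

```python
def adc_gain_word(cal_results):
    """Combine one side's calibration results into a gain register value.

    cal_results: the CLx0..CLx4 and CLxS values for one side
    (plus side -> PG, minus side -> MG).
    Procedure: sum them, divide by two, set bit 15 of the 16-bit result.
    """
    total = sum(cal_results)
    return ((total // 2) | 0x8000) & 0xFFFF


# Made-up CLP0..CLP4, CLPS values, purely for illustration
pg = adc_gain_word([0x20, 0x40, 0x80, 0x100, 0x200, 0x400])  # 0x83F0
```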

One thing to note is that I did not end up using the 16-bit conversion capability. I was a little rushed and was put off by the fact that I could not get it to use the full 0-65535 dynamic range of a 16-bit result variable. It was more like 0-10000. This made figuring out my "volts-per-value" value a little difficult. However, the 12-bit mode gave me 0-4095 with no problems whatsoever. Perhaps I'll read a little further and figure out what is wrong with the way I was doing the 16-bit conversions, but for now 12 bits is more than sufficient. I'm just measuring some voltages.

Since I planned to measure the voltages coming off a Li-Ion battery, I needed to make sure I could handle the range of 3.0V-4.2V. Most of this is outside the Teensy's ADC range (max is 3.3V), so I had to make myself a resistor divider attenuator (with a parallel capacitor for added stability). It might have been better to use some sort of active circuit, but this is supposed to be a quick and dirty DAQ. I'll talk a little more about handling the issues stemming from this resistor divider in the section about the host software.
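The divider math is the usual V_out = V_in · R_bottom / (R_top + R_bottom). Here is a quick Python sketch for checking that the 4.2 V end of the battery range stays under the 3.3 V ADC limit. The function names are mine, and the equal-resistor example mirrors the 1 MΩ pairs mentioned later.

```python
def divider_ratio(r_top, r_bottom):
    """Fraction of the input voltage that appears at the divider midpoint."""
    return r_bottom / (r_top + r_bottom)


def max_input_voltage(r_top, r_bottom, v_ref=3.3):
    """Largest input voltage that keeps the ADC pin at or below v_ref."""
    return v_ref / divider_ratio(r_top, r_bottom)


# Two equal 1 MOhm resistors: ratio 0.5, so 4.2 V in becomes 2.1 V at the pin
pin_voltage = 4.2 * divider_ratio(1e6, 1e6)
```

Large resistor values keep the drain on a tiny battery low. The parallel capacitor helps the high-impedance divider keep up with the ADC's sampling.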

Quick and dirty USB device-side driver

For this project I used the device-side USB driver software that I wrote for an earlier project. Since we are gathering data quite slowly, I figured that a simple control transfer would provide more than enough bandwidth.

```c
static uint8_t tx_buffer[256];

/**
 * Endpoint 0 setup handler
 */
static void usb_endp0_handle_setup(setup_t* packet)
{
    const descriptor_entry_t* entry;
    const uint8_t* data = NULL;
    uint8_t data_length = 0;
    uint32_t size = 0;
    uint16_t *arryBuf = (uint16_t*)tx_buffer;
    uint8_t i = 0;

    switch(packet->wRequestAndType)
    {
    /* ...USB Protocol Stuff... */
    case 0x01c0: //get adc channel value (wIndex); bRequest 0x01, bmRequestType 0xC0 (vendor, device-to-host)
        *((uint16_t*)tx_buffer) = adc_get_value(packet->wIndex);
        data = tx_buffer;
        data_length = 2;
        break;
    default:
        goto stall;
    }

    //if we are sent here, we need to send some data
    send:
    /* ...Send Logic... */

    //if we make it here, we are not able to send data and have stalled
    stall:
    /* ...Stall logic... */
}
```

I added a vendor control request (0x01) which uses the wIndex (not to be confused with the cleaning product) value to select a channel to read. The host software can now issue vendor control request 0x01, setting wIndex accordingly, and get the raw value last read from a particular analog channel. To keep things easy, I numbered the analog channels using the same scheme as the standard Teensy 3.1 layout: wIndex 0 corresponds to A0, wIndex 1 corresponds to A1, and so forth. The adc_get_value function returns the most recent conversion result for a particular channel. The ADC samples continuously, so the USB read doesn't initiate a conversion; it just reads what happened on the channel during the most recent conversion.
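The `0x01c0` in the switch statement is the first two bytes of the 8-byte SETUP packet read as a single little-endian 16-bit word. The low byte is bmRequestType 0xC0 (device-to-host, vendor, device recipient) and the high byte is bRequest 0x01. Here is a small Python sketch of that layout. The constant names are mine, and the wLength of 256 mirrors the host software.

```python
import struct

# bmRequestType bits: direction (bit 7), type (bits 6..5), recipient (bits 4..0)
DIR_IN = 0x80
TYPE_VENDOR = 0x40
RECIPIENT_DEVICE = 0x00

GET_ADC_VALUE = 0x01  # the EZDAQ's vendor request


def build_setup_packet(bm_request_type, b_request, w_value, w_index, w_length):
    """Pack the 8-byte SETUP packet; multi-byte fields are little-endian."""
    return struct.pack("<BBHHH", bm_request_type, b_request,
                       w_value, w_index, w_length)


packet = build_setup_packet(DIR_IN | TYPE_VENDOR | RECIPIENT_DEVICE,
                            GET_ADC_VALUE, 0, 1, 256)  # read channel A1
# Reading the first two bytes as one little-endian word puts bRequest in the
# high byte and bmRequestType in the low byte, as the firmware's switch expects.
(w_request_and_type,) = struct.unpack_from("<H", packet, 0)
print(hex(w_request_and_type))  # 0x1c0
```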

Host software

Since libusb is easy to use from Python via PyUSB, I decided to write the whole thing in Python. Originally I planned on some sort of fancy GUI until I realized that it would be far simpler just to output a CSV and use MATLAB or Excel to process the data. The software is simple enough that I can just put the entire thing here:

```python
#!/usr/bin/env python3

# Python Host for EZDAQ
# Kevin Cuzner
#
# Requires PyUSB

import usb.core, usb.util
import argparse, time, struct

idVendor = 0x16c0
idProduct = 0x05dc
sManufacturer = 'kevincuzner.com'
sProduct = 'EZDAQ'

VOLTS_PER = 3.3/4096 # 3.3V reference is being used

def find_device():
    # Several devices can share this VID/PID, so match the strings as well
    for dev in usb.core.find(find_all=True, idVendor=idVendor, idProduct=idProduct):
        if usb.util.get_string(dev, dev.iManufacturer) == sManufacturer and \
                usb.util.get_string(dev, dev.iProduct) == sProduct:
            return dev

def get_value(dev, channel):
    # Vendor request 0x01, device-to-host; wIndex selects the channel
    rt = usb.util.build_request_type(usb.util.CTRL_IN, usb.util.CTRL_TYPE_VENDOR, usb.util.CTRL_RECIPIENT_DEVICE)
    raw_data = dev.ctrl_transfer(rt, 0x01, wIndex=channel, data_or_wLength=256)
    data = struct.unpack('<H', raw_data) # device returns one little-endian uint16_t
    return data[0] * VOLTS_PER

def get_values(dev, channels):
    return [get_value(dev, ch) for ch in channels]

def main():
    # Parse arguments
    parser = argparse.ArgumentParser(description='EZDAQ host software writing values to stdout in CSV format')
    parser.add_argument('-t', '--time', help='Set time between samples', type=float, default=0.5)
    parser.add_argument('-a', '--attenuation', help='Set channel attenuation level', type=float, nargs=2, default=[], action='append', metavar=('CHANNEL', 'ATTENUATION'))
    parser.add_argument('channels', help='Channel number to record', type=int, nargs='+', choices=range(0, 10))
    args = parser.parse_args()

    # Set up attenuation dictionary (channels without -a default to 1)
    att = args.attenuation if len(args.attenuation) else [[ch, 1] for ch in args.channels]
    att = dict([(l[0], l[1]) for l in att])
    for ch in args.channels:
        if ch not in att:
            att[ch] = 1

    # Perform data logging
    dev = find_device()
    if dev is None:
        raise ValueError('No EZDAQ Found')
    dev.set_configuration()
    print(','.join(['Time']+['Channel ' + str(ch) for ch in args.channels]))
    while True:
        values = get_values(dev, args.channels)
        # Undo each channel's divider; flush so samples reach a redirected file promptly
        print(','.join([str(time.time())] + [str(v[1] * (1/att[v[0]])) for v in zip(args.channels, values)]), flush=True)
        time.sleep(args.time)

if __name__ == '__main__':
    main()
```

Basically, I just use the argparse module to take some command line inputs, find the device using PyUSB, and spit out the requested channel values in a CSV format to stdout every so often.

In addition to simply displaying the data, the program also converts the raw ADC values into useful voltage values. I contemplated doing this on the device, but it was simpler to configure if I didn't have to reflash it every time I wanted to make an adjustment. One thing this lets me do is a sort of calibration using the "attenuation" values that I pass to the host. The idea with these values is to compensate for a voltage divider in front of the analog input so that I can measure higher voltages, even though the Teensy 3.1 only supports voltages up to 3.3V.

For example, if I plugged my 50%-ish resistor divider on channel A0 into 3.3V, I would run the following command:

```
$ ./ezdaq 0
Time,Channel 0
1467771464.9665403,1.7990478515625
...
```

We now have 1.799 for the "voltage" seen at the pin with an attenuation factor of 1. If we divide 1.799 by 3.3 we get 0.545 for our attenuation value. Now we run the following to get our newly calibrated value:

```
$ ./ezdaq -a 0 0.545 0
Time,Channel 0
1467771571.2447994,3.301005232
...
```

This process highlights an issue with using standard resistors: unless they are precision parts, the actual divider ratio will rarely match the nominal one very well. I used four 1 MΩ resistors to make two voltage dividers. One of them divided by about 46% and the other by close to 48%. Those seem close, but in this circuit I needed to be accurate to at least 50mV, and the difference between 46% and 48% is enough to throw this off. At 4.2V, assuming 48% when the true ratio is 46% would report about 4.03V, an error of roughly 175mV. So when deriving an input voltage from behind an imprecise voltage divider, some form of calibration is definitely needed.

Conclusion

After hooking everything up and getting everything to run, it was fairly simple for me to take some two-channel measurements:

```
$ ./ezdaq -t 5 -a 0 0.465 -a 1 0.477 0 1 > ~/Projects/AVR/the-project/test/charge.csv
```

This will dump the output of my program into the charge.csv file (which is measuring the charge cycle on the battery). I will get samples every 5 seconds. Later, I can use this data to make sure my circuit is working properly and observe its behavior over long periods of time. While crude, this quick and dirty DAQ solution works quite well for my purposes.
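The host leaves processing to MATLAB or Excel, but the CSV is just as easy to load in Python. Here is a minimal sketch using only the standard library. `load_log` is a hypothetical helper, and it assumes the header and column format the host software prints.

```python
import csv


def load_log(lines):
    """Read EZDAQ CSV output into elapsed hours and per-channel voltage lists.

    lines: any iterable of CSV text lines, such as an open file.
    """
    reader = csv.reader(lines)
    header = next(reader)  # e.g. ['Time', 'Channel 0', 'Channel 1']
    hours = []
    channels = {name: [] for name in header[1:]}
    start = None
    for row in reader:
        if not row:
            continue  # skip blank lines
        t = float(row[0])
        if start is None:
            start = t
        hours.append((t - start) / 3600.0)
        for name, value in zip(header[1:], row[1:]):
            channels[name].append(float(value))
    return hours, channels
```

Usage would be along the lines of `with open("charge.csv", newline="") as f: hours, channels = load_log(f)`. The result is then ready for plotting or for checking the charge curve.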

Dev boards? Where we're going we won't need dev boards...

A complete tutorial for using an STM32 without a dev board

Introduction

About two years ago I started working with the Teensy 3.1 (which uses a Freescale Kinetis ARM Cortex microcontroller) and I was super impressed with the ARM processor, both for its power and its relative simplicity (it is not simple...it's just relatively simple for the amount of power you get for the cost, IMO). Almost all my projects before that point had used AVRs and PICs (I'm in the AVR camp now), but ARM-based microcontrollers had now become serious contenders for something I could go to instead. I soon began working on a small development board project, also involving some Freescale Kinetis microcontrollers since those are what I have become the most familiar with. Sadly, I have had little success, since I have been trying to make a programmer myself (the official one is a minimum of $200). During the course of this project I came across a LOT of STM32 stuff, and it seemed that it was actually quite easy to set up. Lots of the projects used the STM32 Discovery and similar dev boards, which are great tools and provide an easy introduction to ARM microcontrollers. However, my interest is more in very bare metal development: soldering the chip to a board and hooking it up to a programmer. Who needs any of that dev board stuff? For some reason I just find doing embedded development without a development board absolutely fascinating. Some people might interpret doing things this way as a form of masochism. Perhaps I should start seeing a doctor...

Having seen how common the STM32 family was (both in dev boards and in commercial products) and noting that they were similarly priced to the Freescale Kinetis series, I looked into exactly what I would need to program these, saw that the stuff was cheap, and bought it. After receiving my parts and soldering everything up, I plugged everything into my computer and had a program running on the STM32 in a matter of hours. Contrast that with a year spent trying to program a Kinetis KL26 with only partial success.

This post is a complete step-by-step tutorial on getting an STM32 microcontroller up and running without using a single dev board (breakout boards don't count as dev boards for the purposes of this tutorial). I'm writing this because I could not find a standalone tutorial for doing this with an ARM microcontroller and I ended up having to piece it together bit by bit with a lot of googling. My objective is to walk you through the process of purchasing the appropriate parts, writing a small program, and uploading it to the microcontroller.

I make the following assumptions:

- The reader is familiar with electronics and soldering.

- The reader is familiar with flash-based microcontrollers in general (PIC, AVR, ARM, etc) and has programmed a few using a separate standalone programmer before.

- The reader knows how to read a datasheet.

- The reader knows C and is at least passingly familiar with the overall embedded build process of compilation-linking-flashing.

- The reader knows about makefiles.

- The reader is ridiculously excited about ARM microcontrollers and is strongly motivated to overlook any mistakes here and try this out for themselves (srsly tho...if you see a problem or have a suggestion, leave it in the comments. I really do appreciate feedback.)

All code, makefiles, and configuration stuff can be found in the project repository on GitHub. Project Repository: https://github.com/kcuzner/stm32f103c8-blink

Materials

You will require the following materials:

- A computer running Linux. If you run Windows only, please don't be dissuaded. I'm just lazy and don't want to test this for Windows. It may require some finagling. Manufacturer support is actually better for Windows since they provide some interesting configuration and programming software that is Windows only...but who needs that stuff anyway?

- An STLinkV2 clone from eBay. Here's one very similar to the one I bought. ~$3

- Some STM32F103C8's from eBay. Try going with the TQFP-48 package. Why this microcontroller? Because for some reason it is all over the place on eBay. I suspect that the lot I bought (and all of the ones on eBay) is probably not authentic from ST. I hear that Chinese STM32 clones abound nowadays. I got 10 for $12.80.

- A breakout board for a TQFP-48 with 0.5mm pitch. Yes, you will need to solder surface mount. I found mine for $1. I'm sure you can find one for a similar price.

- 4x 0.1uF capacitors for decoupling. Mine are surface mount in the 0603 package. These will be soldered creatively to the breakout board between the power pins to provide some decoupling since we will probably have wires flying all over. I had mine lying around in a parts bin, left over from my development board project. Digikey is great for getting these, but I'm sure you could find them on eBay or Amazon as well.

- Some dupont wires for connecting the programmer to the STM32. You will need at least 4. These are the ones that are sold for Arduinos. These came with my programmer, but you may have some in your parts box. They are dang cheap on Amazon.

- Regular wires.

- An LED and a resistor.

I was able to acquire all of these parts for less than $20. Granted, I already had the capacitors, LED, resistor, and wires lying around in parts boxes, but those are quite cheap anyway.

Side note: Here is an excellent video by the EE guru Dave Jones on surface mount soldering if the prospect is less than palatable to you: https://www.youtube.com/watch?v=b9FC9fAlfQE

Step 1: Download the datasheets

Above we decided to use the STM32F103C8 ARM Cortex-M3 microcontroller in a TQFP-48 package. This microcontroller has so many peripherals it's no wonder it's the one all over eBay. I could easily see it satisfying the requirements for all of my projects. Among other things it has:

- 64K flash, 20K RAM

- 72MHz capability with an internal PLL

- USB

- CAN

- I2C & SPI

- Lots of timers

- Lots of PWM

- Lots of GPIO

All this for ~$1.20/part, no less! Of course, it's more like $6 on Digikey, but for my purposes an eBay-sourced part is just fine.

Ok, so when messing with any microcontroller we need to look at its datasheet to know where to plug stuff in. For almost all ARM microcontrollers there will be no fewer than two datasheet-like documents you will need: the part datasheet and the family reference manual. The datasheet contains information such as the specific pinouts and electrical characteristics, and the family reference manual contains detailed information on how the microcontroller works (core and peripherals). Both are extremely important and will be indispensable for doing anything at all with one of these microcontrollers bare metal.

Find the STM32F103C8 datasheet and family reference manual here (datasheet is at the top of the page, reference manual is at the bottom): http://www.st.com/en/microcontrollers/stm32f103c8.html. They are also found in the "ref" folder of the repository.

Step 2: Figure out where to solder and do it

After getting the datasheet we need to solder the microcontroller down to the breakout board so that we can start working with it on a standard breadboard. If you prefer to go build your own PCB and all that (I usually do actually) then do that instead of this. However, you will still need to know which pins to hook up.

On the pin diagram posted here you will find the highlighted pins of interest for hooking this thing up. We need the following pins at a minimum:

- Shown in Red/Blue: All power pins: VDD, VSS, AVDD, and AVSS. There are four pairs: three VDD/VSS pairs and one AVDD/AVSS pair. The AVDD/AVSS pair powers the analog/mixed-signal circuitry and is kept separate to give us the opportunity to add extra filtering on those lines and remove supply noise induced by all the switching going on inside the microcontroller; an opportunity I won't take for now.

- Shown in Yellow/Green: The SWD (Serial Wire Debug) pins. These are used to connect to the STLinkV2 programmer that you purchased earlier. They can be used for much more than just programming (full debugging with breakpoints, for a start), but for now we will just use them to talk to the flash on the microcontroller.

- Shown in Cyan: Two fun GPIOs to blink our LEDs with. I chose PB0 and PB1. You could choose others if you would like, but just make sure that they are actually GPIOs and not something unexpected.

Below you will find a picture of my breakout board. I soldered a couple extra pins since I want to experiment with USB.

Very important: You may notice that I have some tiny capacitors (0.1uF) soldered between the power pins (the one on the top is the most visible in the picture). You need to mount a capacitor between each pair of VDD/VSS pins (including AVDD/AVSS). How you do this is completely up to you, but it must be done and *they should be rather close to the microcontroller itself*. If you don't, it is entirely possible that when the microcontroller first powers up (specifically at the first falling edge of the internal clock cycle), the inductance of the flying power wires will create a voltage spike that causes either a malfunction or damage. I've broken microcontrollers by forgetting the decoupling caps and I'm not eager to do it again.

Step 3: Connect the breadboard and programmer

Don't do this with the programmer plugged in.

On the right you will see my STLinkV2 clone which I will use for this project. Barely visible is the pinout. We will need the following pins connected from the programmer onto our breadboard. These come off the header on the non-USB end of the programmer. Pinouts may vary. Double check your programmer!

- 3.3V: We will be using the programmer to actually power the microcontroller since that is the simplest option. I believe this pin is Pin 7 on my header.

- GND: Obviously we need the ground. On mine this was Pin 4.

- SWDIO: This is the data for the SWD bus. Mine has this at Pin 2.

- SWCLK: This is the clock for the SWD bus. Mine has this at Pin 6.

You may notice in the above picture that I have an IDC cable coming off my programmer rather than the dupont wires. I borrowed the cable from my AVR USBASP programmer since it was closer at hand than the dupont wires that came with the STLinkV2.

Next, we need to connect the following pins on the breadboard:

- STM32 [A]VSS pins 8, 23, 35, and 47 connected to ground.

- STM32 [A]VDD pins 9, 24, 36, and 48 connected to 3.3V.

- STM32 pin 34 to SWDIO.

- STM32 pin 37 to SWCLK.

- STM32 PB0 pin 18 to a resistor connected to the anode of an LED. The cathode of the LED goes to ground. Pin 19 (PB1) can also be connected in a similar fashion if you should so choose.

Here is my breadboard setup:

Step 4: Download the STM32F1xx C headers

Project Repository: https://github.com/kcuzner/stm32f103c8-blink

Since we are going to write a program, we need the headers. These are part of the STM32CubeF1 library found here.

Visit the page and download the STM32CubeF1 zip file. It will ask for an email address. If you really don't want to give them your email address, the necessary headers can be found in the project github repository.

Alternately, just clone the repository. You'll miss all the fun of poking around the zip file, but sometimes doing less work is better.

The STM32CubeF1 zip file contains several components which are designed to help people get started quickly when programming STM32s. This is one thing that ST definitely does better than Freescale. It was so difficult to find the headers for the Kinetis microcontrollers that I almost gave up at that point. Anyway, inside the zip file we are only interested in the following:

- The contents of Drivers/CMSIS/Device/ST/STM32F1xx/Include. These headers contain the register definitions among other things which we will use in our program to reference the peripherals on the device.

- Drivers/CMSIS/Device/ST/STM32F1xx/Source/Templates/gcc/startup_stm32f103xb.s. This contains the assembly code used to initialize the microcontroller immediately after reset. We could easily write this ourselves, but why reinvent the wheel?

- Drivers/CMSIS/Device/ST/STM32F1xx/Source/Templates/system_stm32f1xx.c. This contains the common system startup routines referenced by the assembly file above.

- Drivers/CMSIS/Device/ST/STM32F1xx/Source/Templates/gcc/linker/STM32F103XB_FLASH.ld. This is the linker script for the next model up from the microcontroller we have. To use it, we just have to change the "128K" to a "64K" in the MEMORY section near the beginning of the file (line 43 in my file) and we are good to go. The linker script tells the linker where to put all the parts of the program inside the microcontroller's flash and RAM. Mine had a "0" on every blank line. If you see this in yours, delete those "0"s, since they will cause errors.

- The contents of Drivers/CMSIS/Include. These are the core header files for the ARM Cortex-M3 and the definitions contained therein are used in all the other header files we reference.

I copied all the files referenced above to various places in my project structure so they could be compiled into the final program. Please visit the repository for the exact locations and such. My objective with this tutorial isn't really to talk too much about project structure, and so I think that's best left as an exercise for the reader.

Step 5: Install the required software

We need to be able to compile the program and flash the resulting binary file to the microcontroller. In order to do this, we will require the following programs to be installed:

- The arm-none-eabi toolchain. I use Arch Linux and had to install "arm-none-eabi-gcc". On Ubuntu this is called "gcc-arm-none-eabi". This is the cross-compiler for the ARM Cortex cores. The name describes the target: "arm" is the architecture, "none" means there is no operating system, and "eabi" refers to the ARM Embedded Application Binary Interface, the calling and data layout conventions the generated code follows. In other words, the compiler is designed for an environment where the program is the only thing running on the target processor, with no underlying operating system to load it into memory and execute it. The compiler still produces ELF files, just like a Linux compiler does. The difference is that on Linux the OS interprets the ELF file and loads the program from it, while here we convert the ELF file into a raw binary image and write that directly into the microcontroller's flash.

- arm-none-eabi binutils. In Arch the package is "arm-none-eabi-binutils". In Ubuntu this is "binutils-arm-none-eabi". This contains some utilities such as "objcopy", which we use to convert the output ELF file into the raw binary format we will use for flashing the microcontroller, and "objdump", which we use to produce a disassembly listing of the program.

- Make. We will be using a makefile, so obviously you will need make installed.

- OpenOCD. I'm using 0.9.0, which I believe is available for both Arch and Ubuntu. This is the program that we will use to talk to the STLinkV2, which in turn talks to the microcontroller. While we are just going to use it to flash the microcontroller, it can also be used for debugging a program on the processor using gdb.

Once you have installed all of the above programs, you should be good to go for ARM development. As for an editor or IDE, I use vim. You can use whatever. It doesn't matter really.

Step 6: Write and compile the program

Ok, so we need to write a program for this microcontroller. We are going to simply toggle a GPIO pin (PB0) on and off. After reset, the processor uses the internal 8MHz RC oscillator (HSI) as its system clock, so it runs at a reasonable 8MHz. There are a few steps that we need to go through in order to actually write to the GPIO, however:

- Enable the clock to PORTB. Most ARM microcontrollers, the STM32 included, have a clock gating system that actually turns off the clock to pretty much all peripherals after system reset. This is a power saving measure as it allows parts of the microcontroller to remain dormant and not consume power until needed. So, we need to turn on the GPIO port before we can use it.

- Set PB0 to a push-pull output. This microcontroller has many different options for the pins including analog input, an open-drain output, a push-pull output, and an alternate function (usually the output of a peripheral such as a timer PWM). We don't want to run our LED open drain for now (though we certainly could), so we choose the push-pull output. Most microcontrollers have push-pull as the default method for driving their outputs.

- Toggle the output state on. Once we get to this point, it's success! We can control the GPIO by just flipping a bit in a register.

- Toggle the output state off. Just like the previous step.

Here is my super-simple main program that does all of the above:

```c
/**
 * STM32F103C8 Blink Demonstration
 *
 * Kevin Cuzner
 */

#include "stm32f1xx.h"

int main(void)
{
    //Step 1: Enable the clock to PORT B
    RCC->APB2ENR |= RCC_APB2ENR_IOPBEN;

    //Step 2: Change PB0's mode to 0x3 (output) and cfg to 0x0 (push-pull)
    GPIOB->CRL = GPIO_CRL_MODE0_0 | GPIO_CRL_MODE0_1;

    while (1)
    {
        //Step 3: Set PB0 high
        GPIOB->BSRR = GPIO_BSRR_BS0;
        //volatile keeps the compiler from optimizing the delay loop away
        for (volatile uint16_t i = 0; i != 0xffff; i++) { }
        //Step 4: Reset PB0 low
        GPIOB->BSRR = GPIO_BSRR_BR0;
        for (volatile uint16_t i = 0; i != 0xffff; i++) { }
    }

    return 0;
}
```

If we turn to our trusty family reference manual, we will see that the clock gating functionality is located in the Reset and Clock Control (RCC) module (section 7 of the manual). The gates to the various peripherals are sorted by the exact data bus they are connected to and have appropriately named registers. The PORTB module is located on the APB2 bus, and so we use the RCC->APB2ENR register to turn on the clock for port B (section 7.3.7 of the manual).

The GPIO block is documented in section 9. We first talk to the low control register (CRL), which controls pins 0-7 of the 16-pin port. There are 4 bits per pin which describe the configuration, grouped into two 2-bit (see how many "2" sounding words I had there?) sections: the Mode and the Configuration. The Mode selects input (0b00) or output (and, for an output, its maximum speed), and the Configuration handles the specifics of the particular mode (analog, floating, or pulled input; push-pull or open-drain output, general purpose or alternate function). We have chosen output with a maximum speed of 50MHz (Mode is 0b11) and general purpose push-pull (Cfg is 0b00). The 50MHz is the maximum switching speed of the output driver; Mode 0b01 and 0b10 select slower 10MHz and 2MHz drivers instead, but for blinking an LED any of them will do.
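The one-line write to GPIOB->CRL in main() replaces the whole register, so PB1-PB7 end up as 0b0000 (analog input) rather than their reset configuration of floating input (CRL resets to 0x44444444). That is harmless for this demo, but if other pins on the port were in use you would want a read-modify-write instead. Below is a minimal host-side sketch of that bit arithmetic; crl_configure is a hypothetical helper name, not something from the ST headers:

```c
#include <stdint.h>

/* Hypothetical helper: return a new GPIOx_CRL value in which the 4-bit
 * field for `pin` (0-7) is replaced by CNF:MODE, leaving the other seven
 * pins untouched. Pin n owns bits 4n+3..4n; MODE is the low two bits of
 * that nibble and CNF the high two bits. */
static uint32_t crl_configure(uint32_t crl, unsigned pin,
                              uint32_t mode, uint32_t cnf)
{
    const unsigned shift = 4u * pin;
    const uint32_t field = ((cnf & 0x3u) << 2) | (mode & 0x3u);

    crl &= ~(0xFu << shift);  /* clear the pin's old CNF and MODE bits */
    crl |= field << shift;    /* insert the new configuration          */
    return crl;
}
```

On the target this would be used as `GPIOB->CRL = crl_configure(GPIOB->CRL, 0, 0x3, 0x0);`, which gives PB0 the same setting as main() while leaving the other seven pins in their reset state.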

After talking to the CRL, we get to talk to the BSRR register. This register allows us to write a "1" to a bit in order to either set or reset the pin's output value: the lower 16 bits (BS0-BS15) set pins and the upper 16 bits (BR0-BR15) reset them, while writing a "0" to a bit has no effect. We start by writing to the BS0 bit to set PB0 high and then writing to the BR0 bit to reset PB0 low. Pretty straightforward.
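Since a "0" bit in BSRR leaves its pin alone, a single write changes only the pins you name, with no read-modify-write of the output data register (ODR). The sketch below models that behavior on the host so the bit layout can be checked without hardware. bsrr_word and odr_after_bsrr are hypothetical names, and the "set wins" rule reflects the reference manual's note that BSx has priority when both BSx and BRx are written:

```c
#include <stdint.h>

/* Build a BSRR word: the low half sets pins, the high half resets them. */
static uint32_t bsrr_word(uint16_t set_pins, uint16_t reset_pins)
{
    return ((uint32_t)reset_pins << 16) | set_pins;
}

/* Host-side model of what a BSRR write does to ODR. Bits written as 0
 * leave their pin alone; if a pin is both set and reset in the same
 * write, the set (BSx) bit wins. */
static uint16_t odr_after_bsrr(uint16_t odr, uint32_t bsrr)
{
    uint16_t set_pins   = (uint16_t)(bsrr & 0xFFFFu);
    uint16_t reset_pins = (uint16_t)(bsrr >> 16);
    return (uint16_t)((odr & ~reset_pins) | set_pins);
}
```

For example, `bsrr_word(1u << 0, 0)` is the same value as GPIO_BSRR_BS0 and `bsrr_word(0, 1u << 0)` the same as GPIO_BSRR_BR0.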

It's not a complicated program. Half the battle is knowing where all the pieces fit. The STM32CubeF1 zip file contains some examples which could prove quite revealing about the specifics of using the various peripherals on the device. In fact, it includes an entire hardware abstraction layer (HAL) which you could compile into your program if you wanted to. However, I have heard some bad things about it from a software engineering perspective (apparently it's badly written and quite ugly). I'm sure it works, though.

So, the next step is to compile the program. See the makefile in the repository. Basically what we are going to do is first compile the main source file, the assembly file we pulled in from the STM32Cube library, and the C file we pulled in from the STM32Cube library. We will then link them using the linker script from the STM32Cube and then dump the output into a binary file.

```makefile
# Makefile for the STM32F103C8 blink program
#
# Kevin Cuzner
#

PROJECT = blink

# Project Structure
SRCDIR = src
COMDIR = common
BINDIR = bin
OBJDIR = obj
INCDIR = include

# Project target
CPU = cortex-m3

# Sources
SRC = $(wildcard $(SRCDIR)/*.c) $(wildcard $(COMDIR)/*.c)
ASM = $(wildcard $(SRCDIR)/*.s) $(wildcard $(COMDIR)/*.s)

# Include directories
INCLUDE = -I$(INCDIR) -Icmsis

# Linker
LSCRIPT = STM32F103X8_FLASH.ld

# C Flags
GCFLAGS = -Wall -fno-common -mthumb -mcpu=$(CPU) -DSTM32F103xB --specs=nosys.specs -g -Wa,-ahlms=$(addprefix $(OBJDIR)/,$(notdir $(<:.c=.lst)))
GCFLAGS += $(INCLUDE)
LDFLAGS += -T$(LSCRIPT) -mthumb -mcpu=$(CPU) --specs=nosys.specs
ASFLAGS += -mcpu=$(CPU)

# Flashing
OCDFLAGS = -f /usr/share/openocd/scripts/interface/stlink-v2.cfg \
    -f /usr/share/openocd/scripts/target/stm32f1x.cfg \
    -f openocd.cfg

# Tools
CC = arm-none-eabi-gcc
AS = arm-none-eabi-as
AR = arm-none-eabi-ar
LD = arm-none-eabi-ld
OBJCOPY = arm-none-eabi-objcopy
SIZE = arm-none-eabi-size
OBJDUMP = arm-none-eabi-objdump
OCD = openocd

RM = rm -rf

## Build process

OBJ := $(addprefix $(OBJDIR)/,$(notdir $(SRC:.c=.o)))
OBJ += $(addprefix $(OBJDIR)/,$(notdir $(ASM:.s=.o)))


all:: $(BINDIR)/$(PROJECT).bin

Build: $(BINDIR)/$(PROJECT).bin

install: $(BINDIR)/$(PROJECT).bin
	$(OCD) $(OCDFLAGS)

$(BINDIR)/$(PROJECT).hex: $(BINDIR)/$(PROJECT).elf
	$(OBJCOPY) -R .stack -O ihex $(BINDIR)/$(PROJECT).elf $(BINDIR)/$(PROJECT).hex

$(BINDIR)/$(PROJECT).bin: $(BINDIR)/$(PROJECT).elf
	$(OBJCOPY) -R .stack -O binary $(BINDIR)/$(PROJECT).elf $(BINDIR)/$(PROJECT).bin

$(BINDIR)/$(PROJECT).elf: $(OBJ)
	@mkdir -p $(dir $@)
	$(CC) $(OBJ) $(LDFLAGS) -o $(BINDIR)/$(PROJECT).elf
	$(OBJDUMP) -D $(BINDIR)/$(PROJECT).elf > $(BINDIR)/$(PROJECT).lst
	$(SIZE) $(BINDIR)/$(PROJECT).elf

macros:
	$(CC) $(GCFLAGS) -dM -E - < /dev/null

cleanBuild: clean

clean:
	$(RM) $(BINDIR)
	$(RM) $(OBJDIR)

# Compilation
$(OBJDIR)/%.o: $(SRCDIR)/%.c
	@mkdir -p $(dir $@)
	$(CC) $(GCFLAGS) -c $< -o $@

$(OBJDIR)/%.o: $(SRCDIR)/%.s
	@mkdir -p $(dir $@)
	$(AS) $(ASFLAGS) -o $@ $<


$(OBJDIR)/%.o: $(COMDIR)/%.c
	@mkdir -p $(dir $@)
	$(CC) $(GCFLAGS) -c $< -o $@

$(OBJDIR)/%.o: $(COMDIR)/%.s
	@mkdir -p $(dir $@)
	$(AS) $(ASFLAGS) -o $@ $<
```

The result of this makefile is that it will create a file called "bin/blink.bin" which contains our compiled program. We can then flash this to our microcontroller using openocd.

Step 7: Flashing the program to the microcontroller

Source for this step: https://github.com/rogerclarkmelbourne/Arduino_STM32/wiki/Programming-an-STM32F103XXX-with-a-generic-%22ST-Link-V2%22-programmer-from-Linux

This is the very last step. We get to do some openocd configuration. Firstly, we need to write a small configuration script that will tell openocd how to flash our program. Here it is:

```
# Configuration for flashing the blink program
init
reset halt
flash write_image erase bin/blink.bin 0x08000000
reset run
shutdown
```

Firstly, we init and halt the processor (reset halt). When the processor is first powered up, it is going to be running whatever program was previously flashed onto the microcontroller. We want to stop this execution before we overwrite the flash. Next we execute "flash write_image erase" which will first erase the flash memory (if needed) and then write our program to it. After writing the program, we then tell the processor to execute the program we just flashed (reset run) and we shutdown openocd.
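Before handing a freshly built image to openocd, it can be worth a quick look at its first two words. On a Cortex-M3 the vector table sits at the start of flash (0x08000000 here): word 0 is the initial stack pointer and word 1 is the address of the reset handler, which must have bit 0 set to mark it as Thumb code. The sketch below checks those two words in a raw image buffer. The helper names are hypothetical, and the address ranges assume the STM32F103C8's 64KB of flash and 20KB of SRAM:

```c
#include <stddef.h>
#include <stdint.h>

#define FLASH_BASE_ADDR 0x08000000u
#define FLASH_SIZE      (64u * 1024u)   /* assumed: STM32F103C8, 64KB flash */
#define SRAM_BASE_ADDR  0x20000000u
#define SRAM_SIZE       (20u * 1024u)   /* assumed: STM32F103C8, 20KB SRAM  */

/* Read a little-endian 32-bit word from a byte buffer. */
static uint32_t read_le32(const uint8_t *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

/* Returns 1 if the first two words of a raw image look like a Cortex-M
 * vector table for this part, 0 otherwise. */
static int vector_table_looks_sane(const uint8_t *img, size_t len)
{
    if (len < 8)
        return 0;

    uint32_t initial_sp = read_le32(img);      /* word 0: initial MSP      */
    uint32_t reset_vec  = read_le32(img + 4);  /* word 1: reset handler    */

    /* The stack pointer should point into (or just past the end of) SRAM. */
    int sp_ok = initial_sp > SRAM_BASE_ADDR &&
                initial_sp <= SRAM_BASE_ADDR + SRAM_SIZE;

    /* The reset handler must be Thumb code (bit 0 set) inside flash. */
    int reset_ok = (reset_vec & 1u) &&
                   reset_vec >= FLASH_BASE_ADDR &&
                   reset_vec <  FLASH_BASE_ADDR + FLASH_SIZE;

    return sp_ok && reset_ok;
}
```

To use it, read bin/blink.bin into a buffer (for example with fopen and fread) and pass the buffer along with its length.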

Now, openocd requires knowledge of a few things. It first needs to know what programmer to use. Next, it needs to know what device is attached to the programmer. Both of these requirements must be satisfied before we can run our script above. We know that we have an STLinkV2 for a programmer and an STM32F1xx attached on the other end. It turns out that openocd actually comes with configuration files for these. On my installation these are located at "/usr/share/openocd/scripts/interface/stlink-v2.cfg" and "/usr/share/openocd/scripts/target/stm32f1x.cfg", respectively. We can execute all three files (the STLink interface, the STM32F1 target, and our flashing routine, which I have named "openocd.cfg") with openocd as follows:

```sh
openocd -f /usr/share/openocd/scripts/interface/stlink-v2.cfg \
    -f /usr/share/openocd/scripts/target/stm32f1x.cfg \
    -f openocd.cfg
```

So, small sidenote: If we left off the "shutdown" command, openocd would actually continue running in "daemon" mode, listening for connections to it. If you wanted to use gdb to interact with the program running on the microcontroller, that is what you would use to do it. You would tell gdb that there is a "remote target" at port 3333, openocd's default gdb port (for example, "target remote localhost:3333"). Openocd listens on that port, so when gdb starts talking to it and issuing debug commands, openocd translates them through the STLinkV2 and sends back the responses from the microcontroller. Isn't that sick?

In the makefile earlier, I actually made this the "install" target, so running "sudo make install" will actually flash the microcontroller. Here is my output from that command for your reference:

```
kcuzner@kcuzner-laptop:~/Projects/ARM/stm32f103-blink$ sudo make install
arm-none-eabi-gcc -Wall -fno-common -mthumb -mcpu=cortex-m3 -DSTM32F103xB --specs=nosys.specs -g -Wa,-ahlms=obj/system_stm32f1xx.lst -Iinclude -Icmsis -c src/system_stm32f1xx.c -o obj/system_stm32f1xx.o
arm-none-eabi-gcc -Wall -fno-common -mthumb -mcpu=cortex-m3 -DSTM32F103xB --specs=nosys.specs -g -Wa,-ahlms=obj/main.lst -Iinclude -Icmsis -c src/main.c -o obj/main.o
arm-none-eabi-as -mcpu=cortex-m3 -o obj/startup_stm32f103x6.o src/startup_stm32f103x6.s
arm-none-eabi-gcc obj/system_stm32f1xx.o obj/main.o obj/startup_stm32f103x6.o -TSTM32F103X8_FLASH.ld -mthumb -mcpu=cortex-m3 --specs=nosys.specs -o bin/blink.elf
arm-none-eabi-objdump -D bin/blink.elf > bin/blink.lst
arm-none-eabi-size bin/blink.elf
   text    data     bss     dec     hex filename
   1756    1092    1564    4412    113c bin/blink.elf
arm-none-eabi-objcopy -R .stack -O binary bin/blink.elf bin/blink.bin
openocd -f /usr/share/openocd/scripts/interface/stlink-v2.cfg -f /usr/share/openocd/scripts/target/stm32f1x.cfg -f openocd.cfg
Open On-Chip Debugger 0.9.0 (2016-04-27-23:18)
Licensed under GNU GPL v2
For bug reports, read
        http://openocd.org/doc/doxygen/bugs.html
Info : auto-selecting first available session transport "hla_swd". To override use 'transport select <transport>'.
Info : The selected transport took over low-level target control. The results might differ compared to plain JTAG/SWD
adapter speed: 1000 kHz
adapter_nsrst_delay: 100
none separate
Info : Unable to match requested speed 1000 kHz, using 950 kHz
Info : Unable to match requested speed 1000 kHz, using 950 kHz
Info : clock speed 950 kHz
Info : STLINK v2 JTAG v17 API v2 SWIM v4 VID 0x0483 PID 0x3748
Info : using stlink api v2
Info : Target voltage: 3.335870
Info : stm32f1x.cpu: hardware has 6 breakpoints, 4 watchpoints
target state: halted
target halted due to debug-request, current mode: Thread
xPSR: 0x01000000 pc: 0x08000380 msp: 0x20004ffc
auto erase enabled
Info : device id = 0x20036410
Info : flash size = 64kbytes
target state: halted
target halted due to breakpoint, current mode: Thread
xPSR: 0x61000000 pc: 0x2000003a msp: 0x20004ffc
wrote 3072 bytes from file bin/blink.bin in 0.249272s (12.035 KiB/s)
shutdown command invoked
kcuzner@kcuzner-laptop:~/Projects/ARM/stm32f103-blink$
```

After doing that I saw the following awesomeness:

Wooo!!! The LED blinks! At this point, you have successfully flashed an ARM Cortex-M3 microcontroller with little more than a cheap programmer from eBay, a breakout board, and a few stray wires. Feel happy about yourself.

Conclusion

For me, this marks the end of one journey and the beginning of another. I can now feel free to experiment with ARM microcontrollers without having to worry about ruining a nice shiny development board. I can buy an obscenely powerful $1 STM32 microcontroller from eBay and put it into any project I want. If I were to try to do that with AVRs, I would be stuck with the ultra-low-end 8-pin ATTiny13A since that's about it for ~$1 AVR eBay offerings (don't worry...I've got plenty of ATMega328PB's...though they weren't $1). I sincerely hope that you found this tutorial useful and that it might serve as a springboard for doing your own dev board-free ARM development.

If you have any questions or comments (or want to let me know about any errors I may have made), let me know in the comments section here. I will try my best to help you out, although I can't always find the time to address every issue.

Teensy 3.1 bare metal: Writing a USB driver

One of the things that has intrigued me for the past couple years is making embedded USB devices. It's an industry standard bus that just about any piece of computing hardware can connect with yet is complex enough that doing it yourself is a bit of a chore.

Traditionally I have used the work of others, mainly the V-USB driver for AVR, to get my devices connected. Lately I have been messing around more with the ARM processor on a Teensy 3.1, which has an integrated USB module. The last microcontrollers I used that had these were the PIC18F4550s that I used in my dot matrix project. Even with those, I used Microchip's library and drivers.

Over the Thanksgiving break I started cobbling together some software with the intent of writing a driver for the USB module in the Teensy myself. I originally started with my bare metal stuff, but I ended up going with something closer to Karl Lunt's solution. I configured Code::Blocks to use the arm-none-eabi compiler that I had installed, created a Code::Blocks project for my code, and used that to build it (with a post-compile event translating the generated elf file into a hex file).

This is a work in progress and the git repository will be updated as things progress since it's not a dedicated demonstration of the USB driver.

The github repository here will eventually be turned into a really, really rudimentary 500-800ksps oscilloscope.

The code: https://github.com/kcuzner/teensy-oscilloscope

The code for this post was taken from the following commit:

https://github.com/kcuzner/teensy-oscilloscope/tree/9a5a4c9108717cfec0174709a72edeab93fcf2b8

At the end of this post, I will have outlined all of the pieces needed to have a simple USB device setup that responds with a descriptor on endpoint 0.

Contents

The Freescale K20 Family and their USB module

Part 3: The interrupt handler state machine

USB Basics

I will actually not be talking about these here as I am most definitely no expert. However, I will point to the page that I found most helpful when writing this: http://www.usbmadesimple.co.uk/index.html

This site explained very clearly exactly what was going on with USB. Coupled with my previous knowledge, it was almost all I needed to understand the protocol.

The Freescale K20 Family and their USB module

The one thing that I don't like about all of these great microcontrollers that come out with USB support is that all of them have their very own special USB module which doesn't work like anyone else's. Sure, there are similarities, but no two are exactly alike. Since I have a Teensy and the K20 family of microcontrollers seems to be relatively popular, I don't feel bad about writing such specific software.

There are two documents I found to be essential to writing this driver:

- The family manual. Getting a correct version for the MK20DX256VLH7 (the processor on the Teensy) can be a pain. PJRC comes to the rescue here: http://www.pjrc.com/teensy/K20P64M72SF1RM.pdf (note, the Teensies based on the MK20DX128VLH5 use a different manual)

- The Kinetis Peripheral Module Quick Reference: http://cache.freescale.com/files/32bit/doc/quick_ref_guide/KQRUG.pdf. This specifies the initialization sequence and other things that will be needed for the module.

There are a few essential parts to understand about the USB module:

- It needs a specific memory layout. Since it doesn't have any dedicated user-accessible memory, it requires that the user specify where things should be. There are specific valid locations for its Buffer Descriptor Table (more on that later) and the endpoint buffers. The last one bit me for several days until I figured it out.

- It has several different clock inputs and all of them must be enabled. Identifying the different signals is the most difficult part. After that, it's not hard.

- The module only handles the electrical aspect of things. It doesn't handle sending descriptors or anything like that. The only real things it handles are the signaling levels, responding to USB packets in a valid manner, and routing data into buffers by endpoint. Other than that, it's all user software.

- The module can act as both a host (USB On-the-go (OTG)) and a device. We will be exclusively focusing on using it as a device here.

In writing this, I must confess that I looked quite a lot at the Teensyduino code along with the V-USB driver code (even though V-USB is for AVR and is pure software). Without these "references", this would have been a very difficult project. Much of the structure found in the last two parts of this document reflects the Teensyduino USB driver since they did it quite efficiently and I didn't spend a lot of time coming up with a "better" way to do it, given the scope of this project. I will likely make more changes as I customize it for my end use-case.

Part 1: The clocks

The K20 family of microcontrollers utilizes a miraculous hardware module which they call the "Multipurpose Clock Generator" (hereafter called the MCG). This is a module which basically allows the microcontroller to take any clock input between a few kilohertz and several megahertz and transform it into a higher frequency clock source that the microcontroller can actually use. This is how the Teensy can have a rated speed of 96MHz but only use a 16MHz crystal. The configuration that this project uses is the Phase Locked Loop (PLL) from the high speed crystal source. The exact setup of this configuration is done by the sysinit code.

The PLL operates by using a divider-multiplier setup where we give it a divisor to divide the input clock frequency by and then a multiplier to multiply that result by to give us the final clock speed. After that, it heads into the System Integration Module (SIM), which distributes the clock. Since the Teensy uses a 16MHz crystal and we need a 96MHz system clock (the reason will become apparent shortly), we set our divisor to 4 and our multiplier to 24 (see common.h). If the other type of Teensy 3 is being used (the one with the MK20DX128VLH5), the divisor would be 8 and the multiplier 36 to give us 72MHz.
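The PLL arithmetic for both Teensy variants can be checked with a few lines of C. This is a minimal sketch with a hypothetical helper name. The 2-4MHz limit on the divided reference is an assumption based on the K20 datasheet's PLL reference range, so verify it there before relying on it:

```c
#include <stdint.h>

/* Hypothetical helper: PLL output = (crystal / divisor) * multiplier.
 * Returns 0 when the divided reference falls outside 2-4MHz, the PLL
 * reference range assumed here for the K20's MCG. */
static uint32_t pll_output_hz(uint32_t crystal_hz, uint32_t divisor,
                              uint32_t multiplier)
{
    if (divisor == 0)
        return 0;

    uint32_t ref_hz = crystal_hz / divisor;  /* PLL reference frequency */
    if (ref_hz < 2000000u || ref_hz > 4000000u)
        return 0;

    return ref_hz * multiplier;
}
```

With the 16MHz crystal, `pll_output_hz(16000000u, 4, 24)` gives 96MHz and `pll_output_hz(16000000u, 8, 36)` gives 72MHz, both with the reference sitting inside the assumed range (4MHz and 2MHz respectively).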

Every module on a K20 microcontroller has a gate on its clock. This saves power since there are many modules on the microcontroller that are not being used in any given application. Distributing the clock to each of these is expensive in terms of power and would be wasted if that module wasn't used. The SIM handles this gating in the SIM_SCGC* registers. Before using any module, its clock gate must be enabled. If this is not done, the microcontroller will "crash" when it tries to talk to the module registers: the access ends in a bus error, which lands in the hard fault handler unless a separate bus fault handler has been enabled. I had this happen once or twice while messing with this. So, the first step is to "turn on" the USB module by setting the appropriate bit in SIM_SCGC4 (per the family manual mentioned above, page 252):

```c
SIM_SCGC4 |= SIM_SCGC4_USBOTG_MASK;
```

Now, the USB module is a bit different than the other modules. In addition to the module clock, it needs a reference clock for USB. The USB module requires that this reference clock be at 48MHz. There are two sources for this clock: an internal source generated by the MCG/SIM or an external source from a pin. We will use the internal source:

```c
SIM_SOPT2 |= SIM_SOPT2_USBSRC_MASK | SIM_SOPT2_PLLFLLSEL_MASK;
SIM_CLKDIV2 = SIM_CLKDIV2_USBDIV(1);
```

The first line here selects that the USB reference clock will come from an internal source. It also specifies that the internal source will be the output from the PLL in the MCG (the other option is the FLL (frequency-locked loop), which we are not using). The second line sets the divider needed to give us 48MHz from the PLL clock. Once again there are two values: the divider and the multiplier. The multiplier (USBFRAC) can only be 1 or 2 and the divider (USBDIV) can be anywhere from 1 to 8. Since we have a 96MHz clock, we simply divide by 2 (the value passed is a 1 since 0 = "divide by 1", 1 = "divide by 2", etc). If we were using the 72MHz clock, we would first multiply by 2 before dividing by 3.
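Since there are only 16 USBDIV/USBFRAC combinations, the right setting can be found by brute force. The sketch below is hypothetical host-side C that reproduces the 96MHz and 72MHz settings described above. It uses the formula USB clock = PLL clock * (USBFRAC + 1) / (USBDIV + 1), and the 0-7 USBDIV range assumes the 3-bit field in SIM_CLKDIV2:

```c
#include <stdint.h>

/* USB clock = PLL clock * (USBFRAC + 1) / (USBDIV + 1), with USBFRAC
 * either 0 or 1 and USBDIV 0-7 (assumed 3-bit field). */
static uint32_t usb_clock_hz(uint32_t pll_hz, uint32_t usbdiv, uint32_t usbfrac)
{
    return pll_hz * (usbfrac + 1u) / (usbdiv + 1u);
}

/* Search for USBDIV/USBFRAC values that give exactly 48MHz.
 * Returns 1 and fills *div and *frac on success, 0 if none exist.
 * Smaller USBFRAC values are tried first. */
static int find_usb_divider(uint32_t pll_hz, uint32_t *div, uint32_t *frac)
{
    for (uint32_t f = 0; f <= 1; f++) {
        for (uint32_t d = 0; d <= 7; d++) {
            uint32_t scaled = pll_hz * (f + 1u);
            if (scaled % (d + 1u) == 0 && scaled / (d + 1u) == 48000000u) {
                *div = d;
                *frac = f;
                return 1;
            }
        }
    }
    return 0;
}
```

For 96MHz this finds USBDIV = 1, USBFRAC = 0 (the value used above), and for 72MHz it finds USBDIV = 2, USBFRAC = 1. The result could then be written as something like `SIM_CLKDIV2 = SIM_CLKDIV2_USBDIV(div) | (frac ? SIM_CLKDIV2_USBFRAC_MASK : 0);`, assuming the header defines SIM_CLKDIV2_USBFRAC_MASK alongside the other SIM masks.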

With that, the clock to the USB module has been activated and the module can now be initialized.

Part 2: The startup sequence

The Peripheral Module Quick Reference guide mentioned earlier contains a flowchart which outlines the exact sequence needed to initialize the USB module to act as a device. I don't know if I can copy it here (yay copyright!), but it can be found on page 134, figure 15-6. There is another flowchart specifying the initialization sequence for using the module as a host.

Our startup sequence goes as follows:

```c
//1: Select clock source
SIM_SOPT2 |= SIM_SOPT2_USBSRC_MASK | SIM_SOPT2_PLLFLLSEL_MASK; //we use MCGPLLCLK divided by USB fractional divider
SIM_CLKDIV2 = SIM_CLKDIV2_USBDIV(1); //(USBFRAC + 1)/(USBDIV + 1) = (0 + 1)/(1 + 1) = 1/2 for 96MHz clock

//2: Gate USB clock
SIM_SCGC4 |= SIM_SCGC4_USBOTG_MASK;

//3: Software USB module reset
USB0_USBTRC0 |= USB_USBTRC0_USBRESET_MASK;
while (USB0_USBTRC0 & USB_USBTRC0_USBRESET_MASK);

//4: Set BDT base registers
USB0_BDTPAGE1 = ((uint32_t)table) >> 8; //bits 15-9
USB0_BDTPAGE2 = ((uint32_t)table) >> 16; //bits 23-16
USB0_BDTPAGE3 = ((uint32_t)table) >> 24; //bits 31-24

//5: Clear all ISR flags and enable weak pull downs
USB0_ISTAT = 0xFF;
USB0_ERRSTAT = 0xFF;
USB0_OTGISTAT = 0xFF;
USB0_USBTRC0 |= 0x40; //a hint was given that this is an undocumented interrupt bit

//6: Enable USB reset interrupt
USB0_CTL = USB_CTL_USBENSOFEN_MASK;
USB0_USBCTRL = 0;

USB0_INTEN |= USB_INTEN_USBRSTEN_MASK;
//NVIC_SET_PRIORITY(IRQ(INT_USB0), 112);
enable_irq(IRQ(INT_USB0));

//7: Enable pull-up resistor on D+ (Full speed, 12Mbit/s)
USB0_CONTROL = USB_CONTROL_DPPULLUPNONOTG_MASK;
```

The first two steps were covered in the last section. The next one is relatively straightforward: we ask the module to perform a "reset" on itself. This returns the module to its initial state, which allows us to configure it as needed. I don't know if the while loop is necessary since the manual says that the reset bit always reads low and only says we must "wait two USB clock cycles". In any case, the code above seems to wait long enough for the module to reset properly.
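If you'd rather not trust an unbounded while loop on a hardware bit, a common alternative is to give up after a fixed number of polls. This is a minimal sketch with a hypothetical helper name, and the poll limit is an arbitrary choice rather than something from the manual:

```c
#include <stdint.h>

/* Hypothetical helper: spin until all bits in `mask` read back as 0,
 * giving up after `max_polls` reads. Returns 1 if the bits cleared and
 * 0 on timeout. `reg` is volatile so every poll performs a real read. */
static int wait_bits_clear(const volatile uint8_t *reg, uint8_t mask,
                           uint32_t max_polls)
{
    while (max_polls--) {
        if ((*reg & mask) == 0)
            return 1;
    }
    return 0;
}
```

On the K20 it could be called as `wait_bits_clear(&USB0_USBTRC0, USB_USBTRC0_USBRESET_MASK, 1000)`, assuming USB0_USBTRC0 expands to a volatile 8-bit register lvalue in the mk20d7 header.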

The next section (4: Set BDT base registers) requires some explanation. Since the USB module doesn't have a dedicated memory block, we have to provide it. The BDT is the "Buffer Descriptor Table" and contains 16 * 4 entries that look like so:

```c
typedef struct {
    uint32_t desc;
    void* addr;
} bdt_t;
```

"desc" is a descriptor for the buffer and "addr" is the address of the buffer. The exact bits of the "desc" are explained in the manual (p. 971, Table 41-4), but they basically specify ownership of the buffer (user program or USB module) and the USB token that generated the data in the buffer (if applicable).
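The BDT_DESC macro used in the interrupt handler isn't shown in this post. One possible definition is sketched below, along with helpers to read back what the module writes into "desc" after a transaction. The bit positions (byte count in bits 25:16, OWN in bit 7, DATA0/1 in bit 6, DTS in bit 3, and the token PID in bits 5:2) are assumptions based on Table 41-4 and, as far as I can tell, match the Teensyduino driver; check them against the manual before use:

```c
#include <stdint.h>

/* Assumed bit positions in the BDT "desc" word (verify against Table 41-4). */
#define BDT_OWN    0x80u  /* 1 = the USB module owns the buffer    */
#define BDT_DATA1  0x40u  /* DATA0/DATA1 toggle for this buffer    */
#define BDT_DTS    0x08u  /* enable data toggle synchronization    */
#define BDT_STALL  0x04u  /* respond with a STALL handshake        */

/* Descriptor handed to the module: byte count goes in bits 25:16. */
#define BDT_DESC(count, data) \
    (BDT_OWN | BDT_DTS | ((data) ? BDT_DATA1 : 0u) | ((uint32_t)(count) << 16))

/* Byte count the module wrote back after a transaction. */
static uint32_t bdt_byte_count(uint32_t desc)
{
    return (desc >> 16) & 0x3FFu;
}

/* Token PID the module wrote back into bits 5:2 (0xD = SETUP). */
static uint32_t bdt_token_pid(uint32_t desc)
{
    return (desc >> 2) & 0xFu;
}
```

With these definitions, `BDT_DESC(ENDP0_SIZE, 0)` hands a 64-byte buffer to the module expecting DATA0, and once the module clears OWN the interrupt code can use the two helpers to see how many bytes arrived and whether the token was a SETUP, IN (0x9), or OUT (0x1).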

Each entry in the BDT corresponds to one of 4 buffers in one of the 16 USB endpoints: the RX even, RX odd, TX even, and TX odd. The RX and TX are pretty self-explanatory: the module needs somewhere to read the data it's going to send and somewhere to write the data it just received. The even and odd are a configuration that I have seen before in the PIC 18F4550 USB module: ping-pong buffers. While one buffer is being sent/received by the module, the other can be in use by user code reading/writing (ping). When the user code is done with its buffers, it swaps buffers, giving the USB module control over the ones it was just using (pong). This allows seamless communication between the host and the device and minimizes the need for copying data between buffers. I have declared the BDT in my code as follows:

```c
#define BDT_INDEX(endpoint, tx, odd) (((endpoint) << 2) | ((tx) << 1) | (odd))
__attribute__ ((section(".usbdescriptortable"), used))
static bdt_t table[(USB_N_ENDPOINTS + 1)*4]; //max endpoints is 15 + 1 control
```

One caveat of the BDT is that it must be aligned to a 512-byte boundary in memory. Our startup code above only passed bits 9-31 of the address of "table" to the module, spread across the three BDTPAGE registers. This is because the module fills in the low 9 bits itself: they are the byte offset of an entry within the table, built from the endpoint number, the direction, and the odd/even bit (16 endpoints x 4 entries x 8 bytes = 512 bytes; the specification of this is found in section 41.4.3, page 970 of the manual). The #define directly above the declaration is a helper macro for referencing entries in the table for specific endpoints (this is used later in the interrupt). Now, accomplishing this boundary alignment requires some modification of the linker script. Before this, I had never had any need to modify a linker script. We basically need to create a special area of memory (in the above, it is called ".usbdescriptortable", and the attribute declaration tells the compiler to place that variable inside of it) which is aligned to a 512-byte boundary in RAM. I declared mine like so:

```
.usbdescriptortable (NOLOAD) : {
    . = ALIGN(512);
    *(.usbdescriptortable*)
} > sram
```

The position of this in the file is mildly important, so looking at the full linker script would probably be good. This particular declaration I more or less lifted from the Teensyduino linker script, with some changes to make it fit into my linker script.
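The numbers behind the 512-byte alignment can be checked on the host. The sketch below uses hypothetical helper names to compute an entry's byte offset from the same bits BDT_INDEX packs together, plus the three BDTPAGE values for an arbitrary, 512-byte aligned example address. It assumes 8-byte entries, which holds on the Cortex-M4 where the addr pointer is 32 bits wide:

```c
#include <stdint.h>

/* Assumed layout: each BDT entry is 8 bytes (32-bit desc + 32-bit addr),
 * 16 endpoints x 4 entries each, so the whole table is 512 bytes. */
#define BDT_ENTRY_SIZE 8u
#define BDT_SIZE       (16u * 4u * BDT_ENTRY_SIZE)

/* Byte offset of an entry: the BDT_INDEX bits scaled by the entry size,
 * so endpoint lands in bits 8:5, tx in bit 4, and odd in bit 3. */
static uint32_t bdt_offset(uint32_t endpoint, uint32_t tx, uint32_t odd)
{
    return ((endpoint << 2) | (tx << 1) | odd) * BDT_ENTRY_SIZE;
}

/* Values written to BDTPAGE1..3 for a 512-byte aligned table address. */
static void bdt_pages(uint32_t table_addr, uint8_t pages[3])
{
    pages[0] = (uint8_t)(table_addr >> 8);   /* BDTPAGE1: bits 15-9 (bit 8 is 0) */
    pages[1] = (uint8_t)(table_addr >> 16);  /* BDTPAGE2: bits 23-16            */
    pages[2] = (uint8_t)(table_addr >> 24);  /* BDTPAGE3: bits 31-24            */
}
```

Because the largest offset (endpoint 15, TX, odd) is 504, every entry fits in the low 9 bits, which is exactly the part of the address the BDTPAGE registers leave out.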

Steps 5-6 set up the interrupts. There is only one USB interrupt, but there are several registers of flags (ISTAT, ERRSTAT, and OTGISTAT). We first reset all of the flags. Interestingly, to reset a flag we write back a '1' to the particular flag bit. This means that writing a flag register back to itself resets exactly the flags that were set, since a flag bit is '1' when it is triggered. After resetting the flags, we enable the interrupt in the NVIC (Nested Vectored Interrupt Controller). I won't discuss the NVIC much, but it is a fairly complex piece of hardware. It has support for lots and lots of interrupts (over 100) and separate priorities for each one. I don't have reliable code for setting interrupt priorities yet, but eventually I'll get around to messing with that. The "enable_irq()" call is a function that is provided in arm_cm4.c, and all it does is enable the interrupt specified by the passed vector number. These numbers are specified in the datasheet, but we have a #define specified in the mk20d7 header file (warning! 12000 lines ahead) which gives us the number.
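The write-1-to-clear behavior is easy to get wrong, so here is a tiny host-side model of it. The function names are hypothetical and only model the register semantics described above:

```c
#include <stdint.h>

/* Host-side model of a write-1-to-clear flag register such as USB0_ISTAT:
 * each '1' written clears that flag, each '0' leaves its flag alone.
 * Returns the register contents after the write. */
static uint8_t w1c_write(uint8_t flags, uint8_t written)
{
    return (uint8_t)(flags & ~written);
}

/* Model of `reg |= mask` on such a register: the read returns every
 * pending flag, so all of them are written back as '1' and cleared. */
static uint8_t w1c_or_equals(uint8_t flags, uint8_t mask)
{
    return w1c_write(flags, (uint8_t)(flags | mask));
}
```

As w1c_or_equals shows, a read-modify-write such as `USB0_ISTAT |= USB_ISTAT_USBRST_MASK;` would clear every pending flag rather than just the reset flag, while writing the mask directly (`USB0_ISTAT = USB_ISTAT_USBRST_MASK;`) clears only that one.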

The very last step in initialization is to set the internal pullup on D+. According to the USB specification, a pullup on D- indicates a low speed device (1.5Mbit/s) and a pullup on D+ indicates a full speed device (12Mbit/s). We want to use the higher speed grade. The Kinetis USB module does not support high speed (480Mbit/s) mode.

Part 3: The interrupt handler state machine

The USB protocol can be interpreted in the context of a state machine with each call to the interrupt being a "tick" in the machine. The interrupt handler must process all of the flags to determine what happened and where to go from there.

```c
#define ENDP0_SIZE 64

/**
 * Endpoint 0 receive buffers (2x64 bytes)
 */
static uint8_t endp0_rx[2][ENDP0_SIZE];

//flags for endpoint 0 transmit buffers
static uint8_t endp0_odd, endp0_data = 0;

/**
 * Handler functions for when a token completes
 * TODO: Determine if this structure really will work for all kinds of handlers
 *
 * I hope this looks like a dynamic jump table to the compiler
 */
static void (*handlers[USB_N_ENDPOINTS + 2]) (uint8_t);

void USBOTG_IRQHandler(void)
{
    uint8_t status;
    uint8_t stat, endpoint;

    status = USB0_ISTAT;

    if (status & USB_ISTAT_USBRST_MASK)
    {
        //handle USB reset

        //initialize endpoint 0 ping-pong buffers
        USB0_CTL |= USB_CTL_ODDRST_MASK;
        endp0_odd = 0;
        table[BDT_INDEX(0, RX, EVEN)].desc = BDT_DESC(ENDP0_SIZE, 0);
        table[BDT_INDEX(0, RX, EVEN)].addr = endp0_rx[0];
        table[BDT_INDEX(0, RX, ODD)].desc = BDT_DESC(ENDP0_SIZE, 0);
        table[BDT_INDEX(0, RX, ODD)].addr = endp0_rx[1];
        table[BDT_INDEX(0, TX, EVEN)].desc = 0;
        table[BDT_INDEX(0, TX, ODD)].desc = 0;

        //initialize endpoint0 to 0x0d (41.5.23)
        //transmit, receive, and handshake
        USB0_ENDPT0 = USB_ENDPT_EPRXEN_MASK | USB_ENDPT_EPTXEN_MASK | USB_ENDPT_EPHSHK_MASK;

        //clear all interrupts...this is a reset
        USB0_ERRSTAT = 0xff;
        USB0_ISTAT = 0xff;

        //after reset, we are address 0, per USB spec
        USB0_ADDR = 0;

        //all necessary interrupts are now active
        USB0_ERREN = 0xFF;
        USB0_INTEN = USB_INTEN_USBRSTEN_MASK | USB_INTEN_ERROREN_MASK |
            USB_INTEN_SOFTOKEN_MASK | USB_INTEN_TOKDNEEN_MASK |
            USB_INTEN_SLEEPEN_MASK | USB_INTEN_STALLEN_MASK;

        return;
    }
    if (status & USB_ISTAT_ERROR_MASK)
    {
        //handle error
        USB0_ERRSTAT = USB0_ERRSTAT;
        USB0_ISTAT = USB_ISTAT_ERROR_MASK;
    }
    if (status & USB_ISTAT_SOFTOK_MASK)
    {
        //handle start of frame token
        USB0_ISTAT = USB_ISTAT_SOFTOK_MASK;
    }
    if (status & USB_ISTAT_TOKDNE_MASK)
    {
        //handle completion of current token being processed
        stat = USB0_STAT;
        endpoint = stat >> 4;
        handlers[endpoint](stat);

        USB0_ISTAT = USB_ISTAT_TOKDNE_MASK;
    }
    if (status & USB_ISTAT_SLEEP_MASK)
    {
        //handle USB sleep
        USB0_ISTAT = USB_ISTAT_SLEEP_MASK;
    }
    if (status & USB_ISTAT_STALL_MASK)
    {
        //handle usb stall
        USB0_ISTAT = USB_ISTAT_STALL_MASK;
    }
}
```

The above code will be executed whenever the IRQ for the USB module fires. This function is set up in the crt0.S file, but with a weak reference, allowing us to override it easily by simply defining a function called USBOTG_IRQHandler. We then proceed to handle all of the USB interrupt flags. If we don't handle all of the flags, the interrupt will execute again, giving us the opportunity to fully process all of them.

Reading through the code, it should be obvious that I have not done much with many of the flags, including USB sleep, errors, and stall. For the purposes of this super simple driver, we really only care about USB resets and USB token decoding.

The very first interrupt that we care about, which fires when we connect the USB device to a host, is the Reset. The host signals a reset by driving both data lines low (an SE0 condition) for at least 10 ms (read the USB basics stuff for more information). When this happens, we need to return our USB state to its initial, ready state. We do a couple of things in sequence:

- Initialize the buffers for endpoint 0. We set the RX buffers to point to some static variables we have defined, which are simply uint8_t arrays of length "ENDP0_SIZE". The TX buffer descriptors are cleared to zero since nothing is going to be transmitted yet. One thing to note is that the ODDRST bit is set in the USB0_CTL register. This is very important since it "synchronizes" the USB module with our code in terms of knowing whether the even or odd buffer should be used next for transmitting. Setting ODDRST makes the even buffer the next one to be used. We have a "user-space" flag (endp0_odd) which we reset at the same time so that we stay in sync with the buffer that the USB module is going to use.

- We enable endpoint 0. Specifically, we say that it can transmit, receive, and handshake. Every endpoint type except isochronous handshakes, but an endpoint can be configured to send, receive, or both. The USB specification defines endpoint 0 as a bidirectional control endpoint, so it both reads and writes. All of the other endpoints are device-specific.

- We clear all of the interrupts. If this is a reset we obviously won't be doing much else.

- Set our USB address to 0. Each device on the USB bus has a 7-bit address (0 to 127). Address 0 is the default address used by devices that haven't been assigned one yet (i.e. have just been reset), so that becomes our address. We will receive a real address later via a SET_ADDRESS request sent to endpoint 0.

- Activate all necessary interrupts. In the previous part where we discussed the initialization sequence we only enabled the reset interrupt. After being reset, we get to enable all of the interrupts that we will need to be able to process USB events.
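The even/odd bookkeeping from the first step can be modeled in a few lines. This is a minimal sketch assuming one software flag per endpoint direction, mirroring `endp0_odd` above; the `pingpong_t` type and function names are hypothetical. Setting ODDRST puts the hardware back on the even buffer, so the software flag is reset at the same moment, and each buffer handed to the module flips it.

```c
#include <assert.h>
#include <stdint.h>

enum { EVEN = 0, ODD = 1 };

/* Hypothetical per-direction ping-pong state, mirroring endp0_odd. */
typedef struct {
    uint8_t next; /* which buffer (EVEN or ODD) the module will use next */
} pingpong_t;

/* Mirror of setting ODDRST: hardware and software both go back to EVEN. */
static void pingpong_reset(pingpong_t *pp) { pp->next = EVEN; }

/* Claim the buffer the module will use next, then advance to the other one. */
static uint8_t pingpong_take(pingpong_t *pp)
{
    uint8_t which = pp->next;
    pp->next ^= 1u;
    return which;
}
```

If the software flag were not reset together with ODDRST, the driver would fill one bank while the module read the other, which is the desynchronization the reset step avoids.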

After a reset the USB module will begin decoding tokens. While there are a couple of different types of tokens, the USB module has a single interrupt for all of them. When a token is decoded, the module gives us information about which endpoint the token was for and which BDT entry should be used. This information is contained in the USB0_STAT register.
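As a sketch of what USB0_STAT carries, assuming the K20 layout (ENDP in bits 7:4, TX in bit 3, ODD in bit 2), the fields can be pulled apart on a host like this. The `stat_fields_t` type and the `decode_stat` and `bdt_index` functions are hypothetical. A convenient consequence of this layout is that `stat >> 2` is already the BDT index `endpoint * 4 + tx * 2 + odd`.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical decoded view of USB0_STAT. */
typedef struct {
    uint8_t endpoint; /* bits 7:4 */
    uint8_t tx;       /* bit 3: 1 = last transaction was a transmit (IN) */
    uint8_t odd;      /* bit 2: 1 = odd buffer bank, 0 = even */
} stat_fields_t;

static stat_fields_t decode_stat(uint8_t stat)
{
    stat_fields_t f = {
        .endpoint = (uint8_t)(stat >> 4),
        .tx = (uint8_t)((stat >> 3) & 1u),
        .odd = (uint8_t)((stat >> 2) & 1u),
    };
    return f;
}

/* Index into a BDT with 4 entries per endpoint: RX even, RX odd, TX even, TX odd. */
static unsigned bdt_index(uint8_t endpoint, uint8_t tx, uint8_t odd)
{
    return ((unsigned)endpoint << 2) | ((unsigned)tx << 1) | odd;
}
```

For example, a STAT value of 0x2C decodes to endpoint 2, TX, odd, and both `bdt_index(2, 1, 1)` and `0x2C >> 2` give 11.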

The exact method for processing these tokens is up to the individual developer. My choice for the moment was to make a dynamic jump table of sorts which stores 16 function pointers, one of which is called to process each token. Initially, these pointers point to dummy functions that do nothing. The code for the endpoint 0 handler will be discussed in the next section.

Our code here uses USB0_STAT to determine which endpoint the token was decoded for, finds the appropriate function pointer, and calls it with the value of USB0_STAT.
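A minimal sketch of such a table, assuming 16 endpoint slots and a do-nothing default handler, is below. The names `handler_t`, `usb_handlers`, `usb_set_handler`, `usb_dispatch`, and `example_endp0_handler` are hypothetical and not the driver's actual API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define N_ENDPOINTS 16 /* USB endpoint numbers run from 0 to 15 */

typedef void (*handler_t)(uint8_t stat);

static void handler_nop(uint8_t stat) { (void)stat; }

static handler_t usb_handlers[N_ENDPOINTS];

/* Every slot starts at the no-op handler, so an unexpected endpoint is harmless. */
static void usb_handlers_init(void)
{
    for (size_t i = 0; i < N_ENDPOINTS; i++)
        usb_handlers[i] = handler_nop;
}

static void usb_set_handler(uint8_t endpoint, handler_t h)
{
    if (endpoint < N_ENDPOINTS)
        usb_handlers[endpoint] = h ? h : handler_nop;
}

/* Called from the TOKDNE branch: the endpoint number is in bits 7:4 of STAT,
 * so stat >> 4 is always a valid index. */
static void usb_dispatch(uint8_t stat)
{
    usb_handlers[stat >> 4](stat);
}

/* Hypothetical example handler that just records the last STAT it saw. */
static uint8_t last_stat_seen;
static void example_endp0_handler(uint8_t stat) { last_stat_seen = stat; }
```

Filling every slot with a no-op up front means the interrupt never has to check for a NULL pointer, at the cost of silently ignoring tokens for endpoints nobody registered.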

Part 4: Token processing & descriptors

This is one part of the driver that doesn't have to be done any particular way, but however it is done, it must process the tokens correctly. My super-simple driver processes them in two stages: processing the token type and processing the token itself.

As mentioned in the previous section, I had a handler for each endpoint that would be called after a token was decoded. The handler for endpoint 0 is as follows:

```c
#define PID_OUT   0x1
#define PID_IN    0x9
#define PID_SOF   0x5
#define PID_SETUP 0xd

typedef struct {
    union {
        struct {
            uint8_t bmRequestType;
            uint8_t bRequest;
        };
        //bmRequestType in the low byte, bRequest in the high byte (little-endian)
        uint16_t wRequestAndType;
    };
    uint16_t wValue;
    uint16_t wIndex;
    uint16_t wLength;
} setup_t;

/**
 * Endpoint 0 handler
 */
static void usb_endp0_handler(uint8_t stat)
{
    static setup_t last_setup;

    //determine which bdt we are looking at here
    bdt_t* bdt = &table[BDT_INDEX(0,
        (stat & USB_STAT_TX_MASK) >> USB_STAT_TX_SHIFT,
        (stat & USB_STAT_ODD_MASK) >> USB_STAT_ODD_SHIFT)];

    switch (BDT_PID(bdt->desc))
    {
    case PID_SETUP:
        //extract the setup token
        last_setup = *((setup_t*)(bdt->addr));

        //we are now done with the buffer
        bdt->desc = BDT_DESC(ENDP0_SIZE, 1);

        //clear any pending IN stuff
        table[BDT_INDEX(0, TX, EVEN)].desc = 0;
        table[BDT_INDEX(0, TX, ODD)].desc = 0;
        endp0_data = 1;

        //run the setup
        usb_endp0_handle_setup(&last_setup);

        //unfreeze this endpoint
        USB0_CTL = USB_CTL_USBENSOFEN_MASK;
        break;
    case PID_IN:
        //SET_ADDRESS (0x0500): the new address only takes effect
        //once the status stage IN transaction has completed
        if (last_setup.wRequestAndType == 0x0500)
        {
            USB0_ADDR = last_setup.wValue;
        }
        break;
    case PID_OUT:
        //nothing to do here..just give the buffer back
        bdt->desc = BDT_DESC(ENDP0_SIZE, 1);
        break;
    case PID_SOF:
        break;
    }

    USB0_CTL = USB_CTL_USBENSOFEN_MASK;
}
```

The very first step in handling a token is determining which buffer contains the data for the token. The first statement does this by looking up the buffer descriptor in the table with the BDT_INDEX macro, which simply implements the addressing scheme shown in Figure 41-3 of the family manual.

After determining where the received data is located, we need to determine exactly which token was decoded. The handler only recognizes four token PIDs (SETUP, IN, OUT, and SOF). Right now, if a token comes through that we don't understand, we don't really do anything. My thought is that I should be initiating an endpoint stall, but I haven't seen anywhere that specifies exactly what I should do for an unrecognized token.

The main token that we care about with endpoint 0 is the SETUP token. The data attached to this token is in the format described by setup_t, so the first step is to cast the buffer pointer to a setup_t pointer and copy the packet out. The packet is kept in a static variable since we need to look at it again for the tokens that follow, especially the IN token that follows a request to be assigned an address.
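The cast in the handler relies on the buffer being suitably aligned and on the target being little-endian, which holds for this Cortex-M4. As an alternative sketch, the 8-byte setup packet can be decoded field by field from raw bytes; `setup_pkt_t`, `le16`, `parse_setup`, and `request_and_type` are hypothetical names, and the field order follows the standard USB SETUP packet layout. The first test packet is a SET_ADDRESS request for address 7, which is why its combined request/type word comes out as 0x0500, the value the handler compares against.

```c
#include <assert.h>
#include <stdint.h>

typedef struct {
    uint8_t bmRequestType;
    uint8_t bRequest;
    uint16_t wValue;
    uint16_t wIndex;
    uint16_t wLength;
} setup_pkt_t;

/* USB sends multi-byte fields little-endian: low byte first. */
static uint16_t le16(const uint8_t *p) { return (uint16_t)(p[0] | (p[1] << 8)); }

static setup_pkt_t parse_setup(const uint8_t buf[8])
{
    setup_pkt_t s = {
        .bmRequestType = buf[0],
        .bRequest = buf[1],
        .wValue = le16(&buf[2]),
        .wIndex = le16(&buf[4]),
        .wLength = le16(&buf[6]),
    };
    return s;
}

/* Same value as the union's wRequestAndType on a little-endian target. */
static uint16_t request_and_type(const setup_pkt_t *s)
{
    return (uint16_t)(s->bmRequestType | (s->bRequest << 8));
}
```

Decoding byte by byte avoids any dependence on buffer alignment or host byte order, at the cost of a few extra instructions per SETUP packet.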

One part of processing a setup token that tripped me up for a while was what the next DATA state should be. The USB standard specifies that each data packet is marked either DATA0 or DATA1, and on a given endpoint the marker toggles after every successful transaction (not every frame). This is controlled by a bit in the first 4 bytes of each BDT entry (the "desc" field), which the USB module reads. The data packet of a SETUP transaction is always DATA0, so immediately following a SETUP token the next data packet must be a DATA1.
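For reference, here is one way descriptor helpers like these could be written. This is a sketch based on the K20 buffer descriptor layout (OWN in bit 7, DATA0/1 in bit 6, DTS in bit 3, byte count in bits 25:16, and the received token PID in bits 5:2 once the module hands the descriptor back). The driver's own `BDT_DESC`/`BDT_PID` definitions are not shown in this section and may differ, so the `SKETCH_` prefix marks these names, along with `next_toggle`, as hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define SKETCH_BDT_OWN   0x80u /* 1 = buffer belongs to the USB module */
#define SKETCH_BDT_DATA1 0x40u /* 1 = DATA1, 0 = DATA0 */
#define SKETCH_BDT_DTS   0x08u /* 1 = module checks the DATA0/1 toggle */

/* Hand a buffer of `count` bytes to the module with the requested data toggle. */
#define SKETCH_BDT_DESC(count, data1) \
    (SKETCH_BDT_OWN | SKETCH_BDT_DTS | ((data1) ? SKETCH_BDT_DATA1 : 0u) | \
     ((uint32_t)(count) << 16))

/* After the module returns the descriptor, bits 5:2 hold the token PID. */
#define SKETCH_BDT_PID(desc) (((desc) >> 2) & 0xFu)

/* The toggle flips after every successful transaction on an endpoint. */
static uint8_t next_toggle(uint8_t data1) { return (uint8_t)(data1 ^ 1u); }
```

Under these assumptions, `SKETCH_BDT_DESC(64, 1)` evaluates to 0x004000C8, and a returned descriptor whose low byte is 0x34 reports PID 0xD, which is `PID_SETUP`.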

After this, the setup function is run (more on that next), and as a final step the USB module is "unfrozen". When a SETUP token is received, the USB module "freezes" by setting the TXSUSPENDTOKENBUSY bit in USB0_CTL, which suspends packet transmission and reception so that processing can occur. Writing USB_CTL_USBENSOFEN_MASK to USB0_CTL clears that bit while keeping the module enabled. My understanding is that this gives the user program time to deal with any pending buffers before the USB module processes another token. I'm not sure what happens if the user program takes too long, but I imagine some error flag will go off.

The guts of handling a SETUP request are as follows:

```c
typedef struct {
    uint8_t bLength;
    uint8_t bDescriptorType;
    uint16_t bcdUSB;
    uint8_t bDeviceClass;
    uint8_t bDeviceSubClass;
    uint8_t bDeviceProtocol;
    uint8_t bMaxPacketSize0;
    uint16_t idVendor;
    uint16_t idProduct;
    uint16_t bcdDevice;
    uint8_t iManufacturer;
    uint8_t iProduct;
    uint8_t iSerialNumber;
    uint8_t bNumConfigurations;
} dev_descriptor_t;

typedef struct {
    uint8_t bLength;
    uint8_t bDescriptorType;
    uint8_t bInterfaceNumber;
    uint8_t bAlternateSetting;
    uint8_t bNumEndpoints;
    uint8_t bInterfaceClass;
    uint8_t bInterfaceSubClass;
    uint8_t bInterfaceProtocol;
    uint8_t iInterface;
} int_descriptor_t;
```

29

30typedef struct {

31 uint8_t bLength;

32 uint8_t bDescriptorType;