Kevin Cuzner's Personal Blog

Electronics, Embedded Systems, and Software are my breakfast, lunch, and dinner.

A New Blog

For the past several years I've struggled with maintaining the WordPress blog on this site. Starting 3 or 4 years ago, the blog began to receive sustained, heavier-than-usual traffic from data centers trying to post comments or simply brute-forcing the admin login page. This peaked around 2021, when I finally turned off all commenting functionality. That cooled things down, although I still had to "kick" the server every few days when it ran out of file handles, thanks to some misconfiguration on my part combined with the spam traffic.

This became annoying, and I needed something even lower-maintenance. To that end, I've written my first functioning blogging software since 2006 or so. There is no dynamic content: everything is statically rendered, and the blog content itself is stored in GitHub, with updates managed using GitHub hooks. The content is written in reStructuredText, and I think I've created a pretty easy-to-use, low-maintenance blog platform (famous last words). Ask me in a decade if I was actually successful lol.

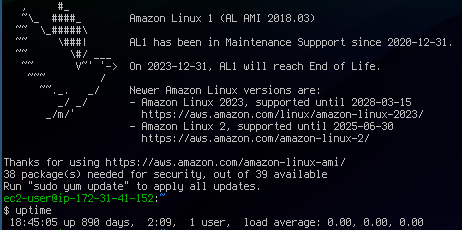

In addition, I've found that there are newer, cheaper AWS instances that suit my needs. I started using this instance over 10 years ago, and before I shut it down it had an uptime of almost 2.5 years. It's truly the end of an era.

Extreme Attributed Metadata with Autofac

Introduction

If you are anything like me, you love reflection in any programming language. For the last two years or so I have been writing code for work almost exclusively in C#, and I have found its reflection system a pleasure to use. It's simple, can be fast, and can do so much.

I recently started using Autofac at work to help achieve Inversion of Control within our projects. It has honestly been the most life-changing C# library I have ever used (sorry Autofac, jQuery and Knockout still take the cake for "life-changing in all languages"), and it has changed the way I decompose problems when writing programs.

This article covers some very interesting features of the Autofac Attributed Metadata module. It is a little lengthy, so here is what will be covered:

- What is Autofac?

- Attributed Metadata: The Basics

- The IMetadataProvider interface

- IMetadataProvider: Making a set of objects

- IMetadataProvider: Hierarchical Metadata

What is Autofac?

This post assumes that the reader is at least passingly familiar with Autofac. However, I will make a short introduction. Autofac allows you to "compose" your program structure by "registering" components and then "resolving" them at runtime. The idea is that you define an interface for some object that does "something" and create one or more classes that implement that interface, each accomplishing the "something" in its own way. Your parent class, which needs one of those objects to do that "something", asks the Autofac container to "resolve" the interface. Autofac gives back either one of your implementations or an IEnumerable of all of them (depending on how you ask it to resolve). The "killer feature" of Autofac, IMO, is being able to use constructor arguments to recursively resolve the "dependencies" of an object. If you want an implementation of an interface passed into your object when it is resolved, just put the interface in the constructor arguments. When Autofac resolves your object, it will resolve that interface for you and pass it in to your constructor. This article isn't meant to introduce Autofac, though, so I would definitely recommend reading up on the subject.
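For readers coming from Python, the core idea of constructor injection can be sketched in a few lines. This is a hypothetical toy, not Autofac's API or implementation. `Container`, `register`, `resolve`, and `resolve_all` are names invented for illustration, and the sketch assumes every dependency is declared as a type annotation on `__init__`.

```python
import typing


class Container:
    """Toy container that builds objects by resolving their annotated __init__ parameters.

    Hypothetical illustration of constructor injection; this is not Autofac's API.
    """

    def __init__(self):
        self._registrations = {}  # service type -> list of implementation classes

    def register(self, implementation, service):
        self._registrations.setdefault(service, []).append(implementation)

    def resolve_all(self, service):
        # Rough analogue of resolving IEnumerable<T>: build every implementation.
        return [self._build(impl) for impl in self._registrations.get(service, [])]

    def resolve(self, service):
        # Rough analogue of resolving T: this sketch uses the most recent registration.
        implementations = self._registrations.get(service)
        if not implementations:
            raise LookupError("nothing registered for " + service.__name__)
        return self._build(implementations[-1])

    def _build(self, implementation):
        init = implementation.__init__
        if init is object.__init__:
            return implementation()  # no custom constructor, so no dependencies
        # Each annotated constructor parameter is itself resolved, recursively.
        hints = typing.get_type_hints(init)
        hints.pop("return", None)
        return implementation(**{name: self.resolve(hint) for name, hint in hints.items()})


class Greeter:
    def greet(self):
        raise NotImplementedError


class EnglishGreeter(Greeter):
    def greet(self):
        return "Hello"


class App:
    def __init__(self, greeter: Greeter):
        self.greeter = greeter


container = Container()
container.register(EnglishGreeter, Greeter)
container.register(App, App)

app = container.resolve(App)  # App's Greeter dependency is supplied automatically
print(app.greeter.greet())    # Hello
```

A real container also handles lifetimes, scopes, and circular dependencies. This sketch attempts none of them.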

Attributed Metadata: The Basics

One of my favorite features has been Attributed Metadata. Autofac allows metadata to be included with objects when they are resolved. Metadata lets one specify static parameters associated with a particular implementation of something registered with the container. This metadata is normally created when the particular class is registered and, without this module, must be specified "manually". The Attributed Metadata module allows one to use custom attributes to specify the metadata on the class itself, rather than when the class is registered. This is an absurdly powerful feature that allows for some pretty interesting things.

For my example I will use an "extensible" letter formatting program that adds some text to the content of a "letter". I define the following interface:

```csharp
interface ILetterFormatter
{
    string FormatLetter(string content);
}
```

This interface is for something that can "format" a letter in some way. For starters, I will define two implementations:

```csharp
class ImpersonalLetterFormatter : ILetterFormatter
{
    // must match the interface's FormatLetter signature
    public string FormatLetter(string content)
    {
        return "To Whom It May Concern:\n\n" + content;
    }
}

class PersonalLetterFormatter : ILetterFormatter
{
    public string FormatLetter(string content)
    {
        return "Dear Individual,\n\n" + content;
    }
}
```

Now, here is a simple program that will use these formatters:

```csharp
using System.Collections.Generic;
using Autofac;

class MainClass
{
    public static void Main(string[] args)
    {
        var builder = new ContainerBuilder();

        // register all ILetterFormatters in this assembly
        builder.RegisterAssemblyTypes(typeof(MainClass).Assembly)
            .Where(c => c.IsAssignableTo<ILetterFormatter>())
            .AsImplementedInterfaces();

        var container = builder.Build();

        using (var scope = container.BeginLifetimeScope())
        {
            // resolve all formatters
            IEnumerable<ILetterFormatter> formatters = scope.Resolve<IEnumerable<ILetterFormatter>>();

            // What do we do now??? So many formatters...which is which?
        }
    }
}
```

Ok, so we have run into a problem: we have a list of formatters, but we don't know which is which. There are a few possible solutions:

- Use an "is" test, or do a "soft cast" to a specific type using the "as" operator. This is bad because it requires the resolver to know about the specific implementations of the interface (which is exactly what we are trying to avoid).

- Just choose one based on order. This is bad because the resolution order is only as guaranteed as reflection order in C#...which is not guaranteed at all. We can't be sure the formatters will be resolved in the same order each time.

- Attach metadata at registration time and resolve the formatters along with it. The issue here is that with RegisterAssemblyTypes as used above, attaching per-class metadata at registration becomes awkward. Also, once any sizable number of things is registered with metadata, it becomes unmanageable IMO.

- Use attributed metadata! Example follows...

We define another class:

```csharp
using System;
using System.ComponentModel.Composition;

[MetadataAttribute]
sealed class LetterFormatterAttribute : Attribute
{
    public string Name { get; private set; }

    public LetterFormatterAttribute(string name)
    {
        this.Name = name;
    }
}
```

Marking it with System.ComponentModel.Composition.MetadataAttributeAttribute (no, that's not a typo) makes the Attributed Metadata module place the public properties of the attribute into the metadata dictionary associated with the class at registration time.

We mark the classes as follows:

```csharp
[LetterFormatter("Impersonal")]
class ImpersonalLetterFormatter : ILetterFormatter
// ...

[LetterFormatter("Personal")]
class PersonalLetterFormatter : ILetterFormatter
// ...
```

Then we change the builder to take the metadata into account by registering the Autofac.Extras.Attributed.AttributedMetadataModule. This causes the Attributed Metadata extensions to scan all of the registered types (past, present, and future) for MetadataAttribute-marked attributes and use their public properties as metadata:

```csharp
var builder = new ContainerBuilder();

builder.RegisterModule<AttributedMetadataModule>();

builder.RegisterAssemblyTypes(typeof(MainClass).Assembly)
    .Where(c => c.IsAssignableTo<ILetterFormatter>())
    .AsImplementedInterfaces();
```

Now, when we resolve the ILetterFormatter classes, we can use either Autofac.Features.Meta<TImplementation> or Autofac.Features.Meta<TImplementation, TMetadata>. I'm personally a fan of the "strong" metadata, i.e. the latter. It forces the metadata dictionary into a class rather than having us access the dictionary directly, which removes any uncertainty about types and such. So, I will create a class to hold the metadata when the implementations are resolved:

```csharp
class LetterMetadata
{
    public string Name { get; set; }
}
```

It is worth noting that each property must have a value in the metadata dictionary unless the System.ComponentModel.DefaultValue attribute is applied to it. For example, if I had an integer property called Foo, an exception would be thrown when the metadata was resolved, since there is no corresponding Foo entry. However, if I put [DefaultValue(6)] on the Foo property, no exception would be thrown and Foo would be set to 6.
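The rule above maps a dictionary onto a typed class. Every property needs an entry unless it declares a default. The same rule can be modeled in Python with a dataclass. This is a hypothetical analogue, not how Autofac implements `Meta<T, TMetadata>`. The helper `to_strong_metadata` and the field names are assumptions, and a dataclass field default stands in for `DefaultValue`.

```python
from dataclasses import MISSING, dataclass, fields


@dataclass
class LetterMetadata:
    name: str
    foo: int = 6  # the default plays the role of [DefaultValue(6)]


def to_strong_metadata(metadata_cls, metadata):
    """Copy a metadata dictionary into an instance of a typed dataclass.

    Hypothetical helper: a field with no dictionary entry falls back to its
    default, and a field with neither an entry nor a default raises KeyError.
    """
    values = {}
    for f in fields(metadata_cls):
        if f.name in metadata:
            values[f.name] = metadata[f.name]
        elif f.default is MISSING and f.default_factory is MISSING:
            raise KeyError("no metadata value for " + repr(f.name))
    return metadata_cls(**values)


print(to_strong_metadata(LetterMetadata, {"name": "Personal"}))
# LetterMetadata(name='Personal', foo=6)
```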

So, we now have the following inside our using statement that controls our scope in the main method:

```csharp
// resolve all formatters
IEnumerable<Meta<ILetterFormatter, LetterMetadata>> formatters = scope.Resolve<IEnumerable<Meta<ILetterFormatter, LetterMetadata>>>();

// we will ask how the letter should be formatted
Console.WriteLine("Formatters:");
foreach (var formatter in formatters)
{
    Console.Write("- ");
    Console.WriteLine(formatter.Metadata.Name);
}

ILetterFormatter chosen = null;
while (chosen == null)
{
    Console.WriteLine("Choose a formatter:");
    string name = Console.ReadLine();
    chosen = formatters.Where(f => f.Metadata.Name == name).Select(f => f.Value).FirstOrDefault();

    if (chosen == null)
        Console.WriteLine(string.Format("Invalid formatter: {0}", name));
}

// just for kicks, we say the first argument is our letter, so we format it and output it to the console
Console.WriteLine(chosen.FormatLetter(args[0]));
```

The IMetadataProvider Interface

So, in the contrived example above, we were able to identify a class based solely on its metadata rather than doing type checking. What's more, we were able to define the metadata through attributes. However, this is old hat for Autofac. This feature has been around for a while.
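The same pattern can be sketched in Python: choose an implementation by its metadata rather than by its concrete type. Here a class decorator plays the part of the metadata attribute. Everything in it (`letter_formatter`, `resolve_with_metadata`, `choose`) is a hypothetical illustration, not part of Autofac.

```python
def letter_formatter(name):
    """Hypothetical class decorator standing in for [LetterFormatter("...")]."""
    def decorate(cls):
        cls.metadata = {"name": name}
        return cls
    return decorate


@letter_formatter("Impersonal")
class ImpersonalLetterFormatter:
    def format_letter(self, content):
        return "To Whom It May Concern:\n\n" + content


@letter_formatter("Personal")
class PersonalLetterFormatter:
    def format_letter(self, content):
        return "Dear Individual,\n\n" + content


def resolve_with_metadata(classes):
    # Pair each new instance with a copy of its class metadata, loosely like Meta<T, TMetadata>.
    return [(cls(), dict(cls.metadata)) for cls in classes]


def choose(formatters, name):
    # Selection looks only at the metadata, never at the concrete class.
    for instance, metadata in formatters:
        if metadata["name"] == name:
            return instance
    return None


formatters = resolve_with_metadata([ImpersonalLetterFormatter, PersonalLetterFormatter])
print(choose(formatters, "Personal").format_letter("Hello!"))
```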

When I was at work the other day, I needed to put sets of things (such as a list of strings) into metadata. Autofac places no prohibition on this in its metadata dictionary: the dictionary is of type IDictionary<string, object>, so it can hold pretty much anything, including arbitrary objects. The problem was that the Attributed Metadata module had no easy way to do this. Attribute constructor arguments are limited to constants of primitive types, strings, enums, Type objects, and one-dimensional arrays of those, which seriously limits what can be put into metadata via attributes.

I decided to remedy this. I submitted an idea for Autofac via a pull request, and after some discussion, a change to the exact way of accomplishing the goal, and some fixing up, the pull request was merged into Autofac. The result was a new feature: the IMetadataProvider interface. This interface lets metadata attributes control exactly how they produce metadata. By default, an attribute just has its properties scanned. However, if the attribute implements IMetadataProvider, its GetMetadata(Type targetType) method is called to get the metadata dictionary instead of doing the property scan, with the argument set to the type being registered. This gives the IMetadataProvider the opportunity to know which class it is actually applied to, something normally not possible without explicitly passing the attribute a Type as a constructor argument.

To get an idea of what this would look like, here is a metadata attribute which implements this interface:

```csharp
[MetadataAttribute]
class LetterFormatterAttribute : Attribute, IMetadataProvider
{
    public string Name { get; private set; }

    public LetterFormatterAttribute(string name)
    {
        this.Name = name;
    }

    #region IMetadataProvider implementation

    public IDictionary<string, object> GetMetadata(Type targetType)
    {
        return new Dictionary<string, object>()
        {
            { "Name", this.Name }
        };
    }

    #endregion
}
```

This attribute doesn't do anything more than the original; it returns exactly what property scanning would have created. However, this allows much more flexibility in how MetadataAttributes can provide metadata. They can scan the type for other attributes, create arbitrary objects, and do many other fun things that I can't even think of.
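The two paths can be modeled roughly in Python: providers are asked for their dictionary, and everything else is property-scanned. This only mirrors the behavior described above. `collect_metadata`, the `metadata_attributes` and `string_options` class attributes, and `get_metadata` are assumptions made for the sketch, not Autofac code.

```python
def collect_metadata(target_type):
    """Build the metadata dictionary for target_type.

    Hypothetical model: target_type.metadata_attributes lists "attribute"
    objects. Objects with a get_metadata(target_type) method are asked for
    their dictionary; all others have their public instance fields scanned.
    """
    metadata = {}
    for attribute in getattr(target_type, "metadata_attributes", []):
        provider = getattr(attribute, "get_metadata", None)
        if callable(provider):
            metadata.update(provider(target_type))
        else:
            public = {k: v for k, v in vars(attribute).items() if not k.startswith("_")}
            metadata.update(public)
    return metadata


class LetterFormatter:
    """Scanned like a plain [MetadataAttribute]: its fields become metadata."""
    def __init__(self, name):
        self.name = name


class StringOption:
    def __init__(self, name, description):
        self.name = name
        self.description = description


class OptionAwareLetterFormatter:
    """Acts like an IMetadataProvider: it inspects the target type itself."""
    def __init__(self, name):
        self.name = name

    def get_metadata(self, target_type):
        options = getattr(target_type, "string_options", [])
        return {"name": self.name, "options": [o.name for o in options]}


class ImpersonalLetterFormatter:
    metadata_attributes = [LetterFormatter("Impersonal")]


class PersonalLetterFormatter:
    metadata_attributes = [OptionAwareLetterFormatter("Personal")]
    string_options = [StringOption("To", "Name of the individual to address the letter to")]


print(collect_metadata(ImpersonalLetterFormatter))  # {'name': 'Impersonal'}
print(collect_metadata(PersonalLetterFormatter))    # {'name': 'Personal', 'options': ['To']}
```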

IMetadataProvider: Making a set of objects

Perhaps the simplest application of this new IMetadataProvider is having the metadata contain a list of objects. Building on our last example, we saw that the "personal" letter formatter just said "Dear Individual" every time. What if we could change that so that there was some way to pass in some "properties" or "options" provided by the caller of the formatting function? We can do this using an IMetadataProvider. We make the following changes:

```csharp
class FormatOptionValue
{
    public string Name { get; set; }
    public object Value { get; set; }
}

interface IFormatOption
{
    string Name { get; }
    string Description { get; }
}

interface IFormatOptionProvider
{
    IFormatOption GetOption();
}

interface ILetterFormatter
{
    string FormatLetter(string content, IEnumerable<FormatOptionValue> options);
}

[MetadataAttribute]
sealed class LetterFormatterAttribute : Attribute, IMetadataProvider
{
    public string Name { get; private set; }

    public LetterFormatterAttribute(string name)
    {
        this.Name = name;
    }

    public IDictionary<string, object> GetMetadata(Type targetType)
    {
        var options = targetType.GetCustomAttributes(typeof(IFormatOptionProvider), true)
            .Cast<IFormatOptionProvider>()
            .Select(p => p.GetOption())
            .ToList();

        return new Dictionary<string, object>()
        {
            { "Name", this.Name },
            { "Options", options }
        };
    }
}

// note the lack of the [MetadataAttribute] here. We don't want Autofac to scan this for properties
[AttributeUsage(AttributeTargets.Class, AllowMultiple = true)]
sealed class StringOptionAttribute : Attribute, IFormatOptionProvider
{
    public string Name { get; private set; }

    public string Description { get; private set; }

    public StringOptionAttribute(string name, string description)
    {
        this.Name = name;
        this.Description = description;
    }

    public IFormatOption GetOption()
    {
        return new StringOption()
        {
            Name = this.Name,
            Description = this.Description
        };
    }
}

public class StringOption : IFormatOption
{
    public string Name { get; set; }

    public string Description { get; set; }

    // note that we could easily define other properties that
    // do not appear in the interface
}

class LetterMetadata
{
    public string Name { get; set; }

    public IEnumerable<IFormatOption> Options { get; set; }
}
```

Ok, so this is just a little bit more complicated. There are two changes to pay attention to: Firstly, the FormatLetter function now takes a list of FormatOptionValues. The second change is what enables the caller of FormatLetter to know which options to pass in. The LetterFormatterAttribute now scans the type in order to construct its metadata dictionary by looking for attributes that describe what options it needs. I feel like the usage of this is best illustrated by decorating our PersonalLetterFormatter for it to have some metadata describing the options that it requires:

```csharp
// the constant is qualified with the class name so the attribute on the class can see it
[LetterFormatter("Personal")]
[StringOption(PersonalLetterFormatter.ToOptionName, "Name of the individual to address the letter to")]
class PersonalLetterFormatter : ILetterFormatter
{
    const string ToOptionName = "To";

    public string FormatLetter(string content, IEnumerable<FormatOptionValue> options)
    {
        var toName = options.Where(o => o.Name == ToOptionName).Select(o => o.Value).FirstOrDefault() as string;
        if (toName == null)
            throw new ArgumentException("The " + ToOptionName + " string option is required");

        return "Dear " + toName + ",\n\n" + content;
    }
}
```

When the metadata for the PersonalLetterFormatter is resolved, it will contain an IFormatOption which represents the To option. The resolver can attempt to cast the IFormatOption to a StringOption to find out what type it should pass in using the FormatOptionValue.

This can be extended quite easily for other IFormatOptionProviders and IFormatOption pairs, making for a very extensible way to easily declare metadata describing a set of options attached to a class.

IMetadataProvider: Hierarchical Metadata

The last example showed that the IMetadataProvider could be used to scan the class to provide metadata into a structure containing an IEnumerable of objects. It is a short leap to see that this could be used to create hierarchies of arbitrary objects.

For now, I won't provide a full example of how this could be done, but in the future I plan on having a gist or something showing arbitrary metadata hierarchy creation.

Conclusion

I probably use metadata more than I should in Autofac. With the addition of IMetadataProvider, I feel like it's quite easy to define complex metadata and use it with Autofac's natural constructor injection system. Overall, using metadata and reflection has made my programs quite a bit more flexible and extensible, and I feel like Autofac and its metadata system complement C#'s built-in reflection system quite well.

Database Abstraction in Python

While recently trying out the Flask web framework for Python, I ended up wanting to access my MySQL database. At work I have recently been using Entity Framework, and I have gotten quite used to having a good database abstraction that allows programmatic creation of SQL. While such frameworks exist in Python, I thought it would be interesting to try writing one. This is one great example of getting carried away with a seemingly simple task.

I aimed for these things:

- Tables should be represented as objects, with each instance of the object representing a row

- These objects should be able to generate their own insert, select, and update queries

- Querying the database should be accomplished by logical predicates, not by strings

- Update queries should be optimized to only update those fields which have changed

- The database objects should have support for "immutable" fields that are generated by the database
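The fourth goal, updating only the fields that changed, boils down to building the SET clause from the mutated columns. Below is a minimal standalone sketch of that idea. It assumes the original and current values are available as dictionaries. `build_update` is a hypothetical helper, not a function from the gist. It uses `%s` placeholders, the PEP 249 "format" paramstyle.

```python
def build_update(table, key_column, key_value, original, current):
    """Return (sql, params) for an UPDATE that touches only changed columns.

    Hypothetical helper: `original` and `current` map column names to values.
    Returns None when nothing changed. Table and column names are assumed to
    be trusted identifiers; only values are passed as %s parameters.
    """
    changed = [name for name, value in current.items() if original.get(name) != value]
    if not changed:
        return None
    assignments = ", ".join(name + " = %s" for name in changed)
    sql = "UPDATE " + table + " SET " + assignments + " WHERE " + key_column + " = %s"
    params = [current[name] for name in changed] + [key_value]
    return sql, params


print(build_update("users", "id", 7,
                   {"username": "someone", "display_name": "Their name"},
                   {"username": "someone", "display_name": "New name"}))
# ('UPDATE users SET display_name = %s WHERE id = %s', ['New name', 7])
```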

I also wanted to support relations between tables with foreign keys, but I have set that aside for now. I have a structure outlined, but it isn't necessary at this point, since all I wanted was a database abstraction for my simple Flask project. I will probably implement it later.

This can be found as a gist here: https://gist.github.com/kcuzner/5246020

Example

Before going into the code, here is an example of what this abstraction can do as it stands. It directly uses the DbObject and DbQuery-inheriting objects which are shown further down in this post.

```python
from db import *
import hashlib

def salt_password(user, unsalted):
    if user is None:
        return unsalted
    m = hashlib.sha512()
    m.update(user.username)
    m.update(unsalted)
    return m.hexdigest()

class User(DbObject):
    dbo_tablename = "users"
    primary_key = IntColumn("id", allow_none=True, mutable=False)
    username = StringColumn("username", "")
    password = PasswordColumn("password", salt_password, "")
    display_name = StringColumn("display_name", "")

    def __init__(self, **kwargs):
        DbObject.__init__(self, **kwargs)

    @classmethod
    def load(cls, cur, username):
        # select() yields a (query, columns) pair
        selection = cls.select('u')
        selection[0].where(selection[1].username == username)
        result = selection[0].execute(cur)
        if len(result) == 0:
            return None
        else:
            return result[0]

    def match_password(self, password):
        salted = salt_password(self, password)
        return salted == self.password

# assume there is a function get_db defined which returns a PEP-249
# database object
def main():
    db = get_db()
    cur = db.cursor()
    user = User.load(cur, "some username")
    if user is not None:
        user.password = "a new password!"
        user.save(cur)
        db.commit()

    new_user = User(username="someone", display_name="Their name")
    new_user.password = "A password that will be hashed"
    new_user.save(cur)
    db.commit()

    print(new_user.primary_key) # this will now have a database-assigned id
```

This example first loads a user using a DbSelectQuery. The user is then modified and the DbObject-level function save() is used to save it. Next, a new user is created and saved using the same function. After saving, the primary key will have been populated and will be printed.

Change Tracking Columns

I started out with columns. I needed columns that track changes and have a mapping to an SQL column name. I came up with the following:

```python
class ColumnSet(object):
    """
    Object which is updated by ColumnInstances to inform changes
    """
    def __init__(self):
        self.__columns = {} # ColumnInstances keyed by column name
        i_dict = type(self).__dict__
        for attr in i_dict:
            obj = i_dict[attr]
            if isinstance(obj, Column):
                # we get an instance of this column
                self.__columns[obj.name] = ColumnInstance(obj, self)

    @property
    def mutated(self):
        """
        Returns the mutated columns for this tracker.
        """
        output = []
        for name in self.__columns:
            column = self.get_column(name)
            if column.mutated:
                output.append(column)
        return output

    def get_column(self, name):
        return self.__columns[name]

class ColumnInstance(object):
    """
    Per-instance column data. This is used in ColumnSet objects to hold data
    specific to that particular instance
    """
    def __init__(self, column, owner):
        """
        column: Column object this is created for
        owner: ColumnSet instance this data belongs to
        """
        self.__column = column
        self.__owner = owner
        self.update(column.default)

    def update(self, value):
        """
        Updates the value for this instance, resetting the mutated flag
        """
        if value is None and not self.__column.allow_none:
            raise ValueError("'None' is invalid for column '" +
                             self.__column.name + "'")
        if self.__column.validate(value):
            self.__value = value
            self.__origvalue = value
        else:
            raise ValueError("'" + str(value) + "' is not valid for column '" +
                             self.__column.name + "'")

    @property
    def column(self):
        return self.__column

    @property
    def owner(self):
        return self.__owner

    @property
    def mutated(self):
        return self.__value != self.__origvalue

    @property
    def value(self):
        return self.__value

    @value.setter
    def value(self, value):
        if value is None and not self.__column.allow_none:
            raise ValueError("'None' is invalid for column '" +
                             self.__column.name + "'")
        if not self.__column.mutable:
            raise AttributeError("Column '" + self.__column.name + "' is not" +
                                 " mutable")
        if self.__column.validate(value):
            self.__value = value
        else:
            # str() so non-string values don't break the error message
            raise ValueError("'" + str(value) + "' is not valid for column '" +
                             self.__column.name + "'")

class Column(object):
    """
    Column descriptor for a column
    """
    def __init__(self, name, default=None, allow_none=False, mutable=True):
        """
        Initializes a column

        name: Name of the column this maps to
        default: Default value
        allow_none: Whether none (db null) values are allowed
        mutable: Whether this can be mutated by a setter
        """
        self.__name = name
        self.__allow_none = allow_none
        self.__mutable = mutable
        self.__default = default

    def validate(self, value):
        """
        In a child class, this will validate values being set
        """
        raise NotImplementedError

    @property
    def name(self):
        return self.__name

    @property
    def allow_none(self):
        return self.__allow_none

    @property
    def mutable(self):
        return self.__mutable

    @property
    def default(self):
        return self.__default

    def __get__(self, owner, ownertype=None):
        """
        Gets the value for this column for the passed owner
        """
        if owner is None:
            return self
        if not isinstance(owner, ColumnSet):
            raise TypeError("Columns are only allowed on ColumnSets")
        return owner.get_column(self.name).value

    def __set__(self, owner, value):
        """
        Sets the value for this column for the passed owner
        """
        if not isinstance(owner, ColumnSet):
            raise TypeError("Columns are only allowed on ColumnSets")
        owner.get_column(self.name).value = value

class StringColumn(Column):
    def validate(self, value):
        if value is None and self.allow_none:
            return True
        if isinstance(value, basestring):
            return True
        return False

class IntColumn(Column):
    def validate(self, value):
        if value is None and self.allow_none:
            return True
        if isinstance(value, int) or isinstance(value, long):
            return True
        return False

class PasswordColumn(Column):
    def __init__(self, name, salt_function, default=None, allow_none=False,
                 mutable=True):
        """
        Create a new password column which uses the specified salt function

        salt_function: a function(self, value) which returns the salted string
        """
        Column.__init__(self, name, default, allow_none, mutable)
        self.__salt_function = salt_function
    def validate(self, value):
        return True
    def __set__(self, owner, value):
        salted = self.__salt_function(owner, value)
        super(PasswordColumn, self).__set__(owner, salted)
```

The Column class describes the column and is implemented as a descriptor. Each ColumnSet instance contains multiple columns and holds ColumnInstance objects, which store the per-object state of each column, such as its value and whether it has been mutated. Each column type has a validation function to help screen invalid data out of the columns. When a ColumnSet is initialized, it scans its class for columns and creates its ColumnInstances at that moment.
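Without the validation and mutability rules, the descriptor-based change tracking described above can be sketched in modern Python 3. `TrackedColumn` and `TrackedRow` are hypothetical names. Like the original, this sketch only looks at descriptors declared directly on the row's own class.

```python
class TrackedColumn:
    """Data descriptor mapping an attribute to a SQL column name (hypothetical sketch)."""

    def __init__(self, name, default=None):
        self.name = name
        self.default = default

    def __get__(self, instance, owner=None):
        if instance is None:
            return self  # class access returns the descriptor itself
        return instance._values[self.name]

    def __set__(self, instance, value):
        instance._values[self.name] = value


class TrackedRow:
    """Each instance keeps current and original values, keyed by column name."""

    def __init__(self, **kwargs):
        self._values = {}
        for attr, column in vars(type(self)).items():
            if isinstance(column, TrackedColumn):
                self._values[column.name] = kwargs.get(attr, column.default)
        self._original = dict(self._values)

    def mutated(self):
        """Names of columns whose value differs from the last saved state."""
        return [name for name, value in self._values.items() if value != self._original[name]]

    def mark_saved(self):
        self._original = dict(self._values)


class User(TrackedRow):
    username = TrackedColumn("username", "")
    display_name = TrackedColumn("display_name", "")


user = User(username="someone", display_name="Their name")
user.display_name = "New name"
print(user.mutated())  # ['display_name']
```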

Generation of SQL using logical predicates

The next thing I had to create was the database querying structure. I decided that rather than actually using the ColumnInstance or Column objects, I would use a go-between object that can be assigned a "prefix". A common thing to do in SQL queries is to rename the tables in the query so that you can reference the same table multiple times or use different tables with the same column names. So, for example if I had a table called posts and I also had a table called users and they both shared a column called 'last_update', I could assign a prefix 'p' to the post columns and a prefix 'u' to the user columns so that the final column name would be 'p.last_update' and 'u.last_update' for posts and users respectively.

Another thing I wanted to do was avoid the usage of SQL in constructing my queries. This is similar to the way that LINQ works for C#: A predicate is specified and later translated into an SQL query or a series of operations in memory depending on what is going on. So, in Python one of my queries looks like so:

```python
class Table(ColumnSet):
    some_column = StringColumn("column_1", "")
    another = IntColumn("column_2", 0)

a_variable = 5
columns = Table.get_columns('x') # columns with a prefix 'x'
query = DbQuery() # This base class just makes a where statement
query.where((columns.some_column == "4") & (columns.another > a_variable))
print(query.sql)
```

This would print out a tuple (" WHERE x.column_1 = %s AND x.column_2 > %s", ["4", 5]). So, how does this work? I used operator overloading to create DbQueryExpression objects. The code is like so:

```python
class DbQueryExpression(object):
    """
    Query expression created from columns, literals, and operators
    """
    def __and__(self, other):
        return DbQueryConjunction(self, other)
    def __or__(self, other):
        return DbQueryDisjunction(self, other)

    def __str__(self):
        raise NotImplementedError
    @property
    def arguments(self):
        raise NotImplementedError

class DbQueryConjunction(DbQueryExpression):
    """
    Query expression joining together a left and right expression with an
    AND statement
    """
    def __init__(self, l, r):
        DbQueryExpression.__init__(self)
        self.l = l
        self.r = r
    def __str__(self):
        return str(self.l) + " AND " + str(self.r)
    @property
    def arguments(self):
        return self.l.arguments + self.r.arguments

class DbQueryDisjunction(DbQueryExpression):
    """
    Query expression joining together a left and right expression with an
    OR statement
    """
    def __init__(self, l, r):
        DbQueryExpression.__init__(self)
        self.l = l
        self.r = r
    def __str__(self):
        # parenthesized so an OR nested inside an AND keeps its meaning
        return "(" + str(self.l) + " OR " + str(self.r) + ")"
    @property
    def arguments(self):
        return self.l.arguments + self.r.arguments

class DbQueryColumnComparison(DbQueryExpression):
    """
    Query expression comparing a combination of a column and/or a value
    """
    def __init__(self, l, op, r):
        DbQueryExpression.__init__(self)
        self.l = l
        self.op = op
        self.r = r
    def __str__(self):
        output = ""
        if isinstance(self.l, DbQueryColumn):
            prefix = self.l.prefix
            if prefix is not None:
                output += prefix + "."
            output += self.l.name
        elif self.l is None:
            output += "NULL"
        else:
            output += "%s"
        output += " " + self.op.strip() + " " # pad the operator with single spaces
        if isinstance(self.r, DbQueryColumn):
            prefix = self.r.prefix
            if prefix is not None:
                output += prefix + "."
            output += self.r.name
        elif self.r is None:
            output += "NULL"
        else:
            output += "%s"
        return output
    @property
    def arguments(self):
        output = []
        if not isinstance(self.l, DbQueryColumn) and self.l is not None:
            output.append(self.l)
        if not isinstance(self.r, DbQueryColumn) and self.r is not None:
            output.append(self.r)
        return output

class DbQueryColumnSet(object):
    """
    Represents a set of columns attached to a specific DbObject type. This
    object dynamically builds itself based on a passed type. The columns
    attached to this set may be used in DbQueries
    """
    def __init__(self, dbo_type, prefix):
        d = dbo_type.__dict__
        self.__columns = {}
        for attr in d:
            obj = d[attr]
            if isinstance(obj, Column):
                column = DbQueryColumn(dbo_type, prefix, obj.name)
                setattr(self, attr, column)
                self.__columns[obj.name] = column
    def __len__(self):
        return len(self.__columns)
    def __getitem__(self, key):
        return self.__columns[key]
    def __iter__(self):
        return iter(self.__columns)

class DbQueryColumn(object):
    """
    Represents a Column object used in a DbQuery
    """
    def __init__(self, dbo_type, prefix, column_name):
        self.dbo_type = dbo_type
        self.name = column_name
        self.prefix = prefix

    def __lt__(self, other):
        return DbQueryColumnComparison(self, "<", other)
    def __le__(self, other):
        return DbQueryColumnComparison(self, "<=", other)
    def __eq__(self, other):
        op = "="
        if other is None:
            op = " IS "
        return DbQueryColumnComparison(self, op, other)
    def __ne__(self, other):
        op = "!="
        if other is None:
            op = " IS NOT "
        return DbQueryColumnComparison(self, op, other)
    def __gt__(self, other):
        return DbQueryColumnComparison(self, ">", other)
    def __ge__(self, other):
        return DbQueryColumnComparison(self, ">=", other)
```

The __str__ function and arguments property recursively generate the two halves of a parameterized query: __str__ produces the SQL text, using the column prefixes for column references and a %s placeholder for each literal value, while arguments collects those literal values in the same order. To be honest, this part was the most fun, since I was surprised how easy it was to make predicate expressions using a minimum of classes. One thing that I didn't like, however, is that Python's boolean and/or operators cannot be overloaded. For that reason I had to use the bitwise operators (such as &) instead, so the expressions don't read quite like normal Python logic. Because & binds more tightly than the comparison operators, each comparison also has to be wrapped in parentheses.
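To make the technique concrete outside of the full ORM, here is a minimal, self-contained sketch of the same idea (Python 3): comparison operators on a column object return expression nodes, & and | combine them, and every node can render SQL with %s placeholders plus a matching argument list. The classes Expr, Col, Cmp, and BoolOp are hypothetical stand-ins, not the classes from this post.

```python
class Expr(object):
    """Base node: uses & and | because 'and'/'or' can't be overloaded."""
    def __and__(self, other):
        return BoolOp(self, "AND", other)

    def __or__(self, other):
        return BoolOp(self, "OR", other)


class Col(object):
    """A column reference such as x.age (hypothetical DbQueryColumn stand-in)."""
    def __init__(self, prefix, name):
        self.prefix = prefix
        self.name = name

    def __str__(self):
        return self.prefix + "." + self.name

    def __eq__(self, other):
        return Cmp(self, " IS " if other is None else "=", other)

    def __lt__(self, other):
        return Cmp(self, "<", other)

    def __gt__(self, other):
        return Cmp(self, ">", other)

    __hash__ = object.__hash__  # keep Col hashable despite overriding __eq__


class Cmp(Expr):
    def __init__(self, left, op, right):
        self.left, self.op, self.right = left, op, right

    @staticmethod
    def _render(side):
        if isinstance(side, Col):
            return str(side)
        return "NULL" if side is None else "%s"

    def __str__(self):
        return self._render(self.left) + self.op + self._render(self.right)

    @property
    def arguments(self):
        # literal values only, in the same order as their placeholders
        return [s for s in (self.left, self.right)
                if not isinstance(s, Col) and s is not None]


class BoolOp(Expr):
    def __init__(self, left, op, right):
        self.left, self.op, self.right = left, op, right

    def __str__(self):
        # parenthesize so nested AND/OR keep their grouping in SQL
        return "(" + str(self.left) + " " + self.op + " " + str(self.right) + ")"

    @property
    def arguments(self):
        return self.left.arguments + self.right.arguments


age = Col("x", "age")
name = Col("x", "name")
# the parentheses are required: & and | bind tighter than == and >
expr = (age > 21) & ((name == "bob") | (name == None))
print(str(expr))       # (x.age>%s AND (x.name=%s OR x.name IS NULL))
print(expr.arguments)  # [21, 'bob']
```

The resulting string and list are exactly the pair a PEP 249 cursor.execute(query, args) call expects.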

The resulting DbQueryExpression is fed into my DbQuery object, which does the actual translation to SQL. In the example above, we saw that I just passed a logical expression into my where function. This was actually a DbQueryExpression, since my overloaded operators create DbQueryExpression objects when columns are compared. The DbQueryColumnSet is a dynamically generated object, created from a DbObject, that contains the go-between column objects. We will discuss the DbObject a little further down.
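The "dynamically generated" part of the column set boils down to walking a class's __dict__ and calling setattr for every attribute that is a column declaration. Here is a stripped-down sketch of that idea, where Column, QueryColumn, ColumnSet, and the User table are hypothetical stand-ins rather than the classes above:

```python
class Column(object):
    """Hypothetical column declaration placed on a table class."""
    def __init__(self, name):
        self.name = name


class QueryColumn(object):
    """Go-between object that knows its table prefix and column name."""
    def __init__(self, prefix, name):
        self.prefix = prefix
        self.name = name

    def __str__(self):
        return self.prefix + "." + self.name


class ColumnSet(object):
    def __init__(self, table_type, prefix):
        self._columns = {}
        # vars() returns the class's own __dict__; only Column objects are kept
        for attr, obj in vars(table_type).items():
            if isinstance(obj, Column):
                col = QueryColumn(prefix, obj.name)
                setattr(self, attr, col)        # attribute access: cols.user_id
                self._columns[obj.name] = col   # item access by SQL column name

    def __getitem__(self, key):
        return self._columns[key]

    def __iter__(self):
        return iter(self._columns)


class User(object):
    dbo_tablename = "users"
    user_id = Column("id")
    email = Column("email")


cols = ColumnSet(User, "u")
print(cols.user_id)   # u.id
print(cols["email"])  # u.email
print(sorted(cols))   # ['email', 'id']
```

As in the original, only attributes in the class's own __dict__ are found, so columns declared on a parent class would be skipped.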

The DbQuery objects are implemented as follows:

```python
class DbQueryError(Exception):
    """
    Raised when there is an error constructing a query
    """
    def __init__(self, msg):
        Exception.__init__(self, msg)
        self.message = msg
    def __str__(self):
        return self.message


class DbQuery(object):
    """
    Represents a base SQL Query to a database based upon some DbObjects

    All of the methods implemented here are valid on select, update, and
    delete statements.
    """
    def __init__(self, execute_filter=None):
        """
        execute_filter: Function called as execute_filter(query, cursor) when
                        the DbQuery is executed
        """
        self.__where = []
        self.__limit = None
        self.__execute_filter = execute_filter
    def where(self, expression):
        """Specify an expression to append to the WHERE clause"""
        self.__where.append(expression)
    def limit(self, value=None):
        """Specify the limit to the query (appended to the SQL as-is)"""
        self.__limit = value
    def _where_sql(self):
        """Returns the WHERE clause (all expressions ANDed) and its arguments"""
        if len(self.__where) == 0:
            return "", []
        where = self.__where[0]
        for clause in self.__where[1:]:
            where = where & clause
        return " WHERE " + str(where), where.arguments
    def _limit_sql(self):
        """Returns the LIMIT clause, if one was specified"""
        if self.__limit is None:
            return ""
        return " LIMIT " + str(self.__limit)
    @property
    def sql(self):
        query, args = self._where_sql()
        return query + self._limit_sql(), args
    def execute(self, cur):
        """
        Executes this query on the passed cursor and returns either the result
        of the filter function or the cursor if there is no filter function.
        """
        query, args = self.sql
        cur.execute(query, args)
        if self.__execute_filter:
            return self.__execute_filter(self, cur)
        else:
            return cur


class DbSelectQuery(DbQuery):
    """
    Creates a select query to a database based upon DbObjects
    """
    def __init__(self, execute_filter=None):
        DbQuery.__init__(self, execute_filter)
        self.__select = []
        self.__froms = []
        self.__joins = []
        self.__orderby = []
    def select(self, *columns):
        """Specify one or more columns to select"""
        self.__select += columns
    def from_table(self, dbo_type, prefix):
        """Specify a table to select from"""
        self.__froms.append((dbo_type, prefix))
    def join(self, dbo_type, prefix, on):
        """Specify a table to join to"""
        self.__joins.append((dbo_type, prefix, on))
    def orderby(self, *columns):
        """Specify one or more columns to order by"""
        self.__orderby += columns
    @property
    def sql(self):
        if len(self.__select) == 0:
            raise DbQueryError("No selection in DbSelectQuery")
        if len(self.__froms) == 0:
            raise DbQueryError("No FROM clause in DbSelectQuery")
        query = "SELECT " + ",".join([col.prefix + "." + col.name
                                      for col in self.__select])
        # multiple tables share one comma-separated FROM clause
        query += " FROM " + ",".join([table[0].dbo_tablename + " " + table[1]
                                      for table in self.__froms])
        args = []
        for join in self.__joins:
            query += (" JOIN " + join[0].dbo_tablename + " " + join[1] +
                      " ON " + str(join[2]))
            args += join[2].arguments  # the ON expression may have parameters
        where, where_args = self._where_sql()
        query += where
        args += where_args
        # ORDER BY has to come before LIMIT
        if len(self.__orderby) > 0:
            query += " ORDER BY " + ",".join([col.prefix + "." + col.name
                                              for col in self.__orderby])
        query += self._limit_sql()
        return query, args


class DbInsertQuery(DbQuery):
    """
    Creates an insert query to a database based upon DbObjects. This does not
    include any where or limit expressions
    """
    def __init__(self, dbo_type, prefix, execute_filter=None):
        DbQuery.__init__(self, execute_filter)
        self.table = (dbo_type, prefix)
        self.__values = []
    def value(self, column, value):
        """Specify a value to insert into a column"""
        self.__values.append((column, value))
    @property
    def sql(self):
        if len(self.__values) == 0:
            raise DbQueryError("No values in insert")
        tablename = self.table[0].dbo_tablename
        # only columns belonging to this table's prefix are inserted
        values = [val for val in self.__values
                  if val[0].prefix == self.table[1]]
        query = "INSERT INTO {table} (".format(table=tablename)
        query += ",".join([val[0].name for val in values])
        query += ") VALUES ("
        query += ",".join(["%s"] * len(values))
        query += ")"
        args = [val[1] for val in values]
        return query, args


class DbUpdateQuery(DbQuery):
    """
    Creates an update query to a database based upon DbObjects
    """
    def __init__(self, dbo_type, prefix, execute_filter=None):
        """
        Initialize the update query

        dbo_type: table type to be updating
        prefix: Prefix the columns are known under
        """
        DbQuery.__init__(self, execute_filter)
        self.table = (dbo_type, prefix)
        self.__updates = []
    def update(self, left, right):
        self.__updates.append((left, right))
    @property
    def sql(self):
        if len(self.__updates) == 0:
            raise DbQueryError("No update in DbUpdateQuery")
        query = "UPDATE " + self.table[0].dbo_tablename + " " + self.table[1]
        args = []
        assignments = []
        for left, right in self.__updates:
            # each side is either a column reference or a parameter
            parts = []
            for side in (left, right):
                if isinstance(side, DbQueryColumn):
                    parts.append(side.prefix + "." + side.name)
                else:
                    parts.append("%s")
                    args.append(side)
            assignments.append("=".join(parts))
        # multiple assignments must be comma-separated
        query += " SET " + ",".join(assignments)
        query_parent = super(DbUpdateQuery, self).sql
        query += query_parent[0]
        args += query_parent[1]
        return query, args


class DbDeleteQuery(DbQuery):
    """
    Creates a delete query for a database based on a DbObject
    """
    def __init__(self, dbo_type, prefix, execute_filter=None):
        DbQuery.__init__(self, execute_filter)
        self.table = (dbo_type, prefix)
    @property
    def sql(self):
        query = ("DELETE FROM " + self.table[0].dbo_tablename + " " +
                 self.table[1])
        args = []
        query_parent = super(DbDeleteQuery, self).sql
        query += query_parent[0]
        args += query_parent[1]
        return query, args
```

Each of the SELECT, INSERT, UPDATE, and DELETE query types inherits from a base DbQuery, which handles the WHERE and LIMIT clauses and the execution. I decided to make the DbQuery object take a PEP 249-style cursor object and execute the query itself. My hope is that this will make it a little more portable since, to my knowledge, I didn't use any MySQL-specific constructions in the queries.
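Because execute() only relies on the PEP 249 cursor.execute(query, args) call, the generated SQL can be tried against Python's built-in sqlite3 module. One portability catch is the placeholder: %s is PEP 249's "format" paramstyle, while sqlite3 uses "qmark" (?). A minimal sketch, assuming the generated SQL never contains a literal %s other than placeholders (true for the queries above, since every value is parameterized); to_qmark and the users table are hypothetical:

```python
import sqlite3


def to_qmark(query):
    """Rewrite 'format' style (%s) placeholders into sqlite3's '?' style."""
    return query.replace("%s", "?")


conn = sqlite3.connect(":memory:")
cur = conn.cursor()
print(sqlite3.paramstyle)  # qmark
cur.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, age INTEGER)")
cur.executemany("INSERT INTO users (name, age) VALUES (?, ?)",
                [("alice", 30), ("bob", 17)])

# the kind of (query, args) pair a DbSelectQuery's sql property produces
query, args = "SELECT x.name FROM users x WHERE x.age>%s", [21]
cur.execute(to_qmark(query), args)
print(cur.fetchall())  # [('alice',)]
```

A driver-neutral version would check the driver module's paramstyle attribute rather than hard-coding the translation.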

The different query types each implement a variety of methods corresponding to different parts of an SQL query: where(), limit(), orderby(), select(), from_table(), etc. These take either DbQueryColumns or expressions built from them (as with where(), orderby(), and select()) or a string to be appended to the query, as with limit(). I could easily have made limit take two integers as well, but I was kind of rushing through because I wanted to see if this would even work. A query is built by creating the query object for the desired basic query type and then calling its member functions to add clauses to it.
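As an example of the "two integers" version of limit() mentioned above, here is a hedged sketch of a helper that validates its inputs and renders the LIMIT count OFFSET offset form (accepted by MySQL, PostgreSQL, and SQLite). The function limit_clause is hypothetical; the query classes above do not use it.

```python
def limit_clause(count, offset=0):
    """Render a LIMIT clause from integers instead of a raw string."""
    # Only plain non-negative ints are accepted, so nothing unexpected
    # can be spliced into the SQL text.
    for value in (count, offset):
        if isinstance(value, bool) or not isinstance(value, int) or value < 0:
            raise ValueError("LIMIT values must be non-negative integers")
    if offset:
        return " LIMIT %d OFFSET %d" % (count, offset)
    return " LIMIT %d" % count


print(repr(limit_clause(10)))      # ' LIMIT 10'
print(repr(limit_clause(10, 20)))  # ' LIMIT 10 OFFSET 20'
```

A DbQuery could store the two integers and append this string in place of the raw limit value.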

Executing a query can cause a callback "filter" function to be called, which takes the query and the cursor as arguments. I use this function to create new objects from the data or to update an object. It could probably be used for more clever things as well, but those two cases were my original intent in creating it. If no filter is specified, the cursor itself is returned.
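The filter hook is just a callable that receives the query and the cursor after cursor.execute() has run. Here is a self-contained sketch of the same pattern with sqlite3; MiniQuery and rows_as_dicts are hypothetical names, and the filter uses the standard PEP 249 cursor.description attribute to pair column names with values:

```python
import sqlite3


class MiniQuery(object):
    """Hypothetical stand-in for DbQuery: SQL text, args, optional filter."""
    def __init__(self, sql, args=(), execute_filter=None):
        self.sql = sql
        self.args = args
        self.execute_filter = execute_filter

    def execute(self, cur):
        cur.execute(self.sql, self.args)
        if self.execute_filter:
            return self.execute_filter(self, cur)
        return cur  # no filter: hand the cursor back to the caller


def rows_as_dicts(query, cur):
    """Filter that turns each row into a {column_name: value} dict."""
    names = [d[0] for d in cur.description]  # first field is the column name
    return [dict(zip(names, row)) for row in cur.fetchall()]


conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
cur.execute("INSERT INTO users (name) VALUES (?)", ("alice",))

print(MiniQuery("SELECT id, name FROM users", (), rows_as_dicts).execute(cur))
# [{'id': 1, 'name': 'alice'}]
raw = MiniQuery("SELECT name FROM users").execute(cur)
print(raw.fetchone())  # ('alice',)
```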

Table and row objects

At the top of this hierarchy is the DbObject. A DbObject subclass represents a table in the database with a name and a single primary key column, and each instance represents a row. DbObjects also implement methods for selecting records of their type and for updating themselves when they are changed. They inherit change tracking from the ColumnSet and use DbQueries to accomplish their querying goals. The code is as follows:

```python
class DbObject(ColumnSet):
    """
    A DbObject is a set of columns linked to a table in the database. This is
    synonymous to a row. The following class attributes must be set:

    dbo_tablename : string table name
    primary_key : Column for the primary key
    """
    def __init__(self, **cols):
        ColumnSet.__init__(self)
        for name in cols:
            c = self.get_column(name)
            c.update(cols[name])

    @classmethod
    def get_query_columns(cls, prefix):
        return DbQueryColumnSet(cls, prefix)

    @classmethod
    def select(cls, prefix):
        """
        Returns a DbSelectQuery set up for this DbObject, along with the
        DbQueryColumnSet it was built from
        """
        columns = cls.get_query_columns(prefix)
        def execute(query, cur):
            # build one instance per row, reading the cursor in batches
            output = []
            block = cur.fetchmany()
            while len(block) > 0:
                for row in block:
                    # columns iterates in the same order as the select() below
                    values = {}
                    for i, name in enumerate(columns):
                        values[name] = row[i]
                    output.append(cls(**values))
                block = cur.fetchmany()
            return output
        query = DbSelectQuery(execute)
        query.select(*[columns[name] for name in columns])
        query.from_table(cls, prefix)
        return query, columns

    def get_primary_key_name(self):
        return type(self).__dict__['primary_key'].name

    def save(self, cur):
        """
        Saves any changes to this object to the database
        """
        pk_name = self.get_primary_key_name()
        columns = self.get_query_columns('x')
        if self.primary_key is None:
            # never saved before: INSERT, then read back generated values
            def execute(query, cur):
                self.get_column(pk_name).update(cur.lastrowid)
                selection = []
                for name in columns:
                    if name == pk_name:
                        continue  # we have no need to update the primary key
                    column_instance = self.get_column(name)
                    if not column_instance.column.mutable:
                        selection.append(columns[name])
                if len(selection) != 0:
                    # we get to select to get additional computed values
                    def execute2(query, cur):
                        row = cur.fetchone()
                        for index, s in enumerate(selection):
                            self.get_column(s.name).update(row[index])
                        return True
                    query = DbSelectQuery(execute2)
                    query.select(*selection)
                    query.from_table(type(self), 'x')
                    query.where(columns[pk_name] ==
                                self.get_column(pk_name).value)
                    return query.execute(cur)
                return True
            query = DbInsertQuery(type(self), 'x', execute)
            for name in columns:
                column_instance = self.get_column(name)
                if not column_instance.column.mutable:
                    continue  # immutable columns are filled in after the insert
                query.value(columns[name], column_instance.value)
            return query.execute(cur)
        else:
            # we have been modified: UPDATE only the mutated columns
            modified = self.mutated
            if len(modified) == 0:
                return True
            def execute(query, cur):
                for mod in modified:
                    mod.update(mod.value)
                return True
            query = DbUpdateQuery(type(self), 'x', execute)
            for mod in modified:
                query.update(columns[mod.column.name], mod.value)
            query.where(columns[pk_name] == self.primary_key)
            return query.execute(cur)
```
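The ColumnSet class that DbObject inherits its change tracking from isn't reproduced in this part of the post, so purely as an illustration of the general idea (not of how ColumnSet actually works), here is a minimal sketch of a row object that remembers which attributes were assigned new values, which is the information an UPDATE of only the modified columns needs. TrackedRow and mark_clean are hypothetical; mutated just mirrors the name used in save().

```python
class TrackedRow(object):
    """Records which attributes changed since the row was loaded or saved."""
    def __init__(self, **values):
        # bypass our own __setattr__ while setting up internal state
        object.__setattr__(self, "_dirty", set())
        for key, value in values.items():
            object.__setattr__(self, key, value)

    def __setattr__(self, key, value):
        if getattr(self, key, None) != value:
            self._dirty.add(key)
        object.__setattr__(self, key, value)

    @property
    def mutated(self):
        return sorted(self._dirty)

    def mark_clean(self):
        """Call after a successful UPDATE."""
        self._dirty.clear()


row = TrackedRow(id=1, name="alice", age=30)
row.age = 31
row.name = "alice"  # same value, so not marked as changed
print(row.mutated)  # ['age']
row.mark_clean()
print(row.mutated)  # []
```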

DbObjects require that inheriting classes define two class attributes: dbo_tablename and primary_key. dbo_tablename is just a string giving the name of the table in the database, and primary_key is the Column that will be used as the primary key.
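Nothing in DbObject enforces these two attributes; a missing one only surfaces later, for example as a KeyError from get_primary_key_name(). One possible guard, using Python 3.6+'s __init_subclass__ hook (a swapped-in technique, not something the code above does), is to check at class-definition time. RequiredAttrs is hypothetical, and the string primary_key below is only a placeholder for a real Column.

```python
class RequiredAttrs(object):
    """Base class that refuses subclasses missing required class attributes."""
    required = ("dbo_tablename", "primary_key")

    def __init_subclass__(cls, **kwargs):
        super().__init_subclass__(**kwargs)
        # checked in the subclass's own __dict__, mirroring how
        # get_primary_key_name() looks up 'primary_key'
        missing = [name for name in cls.required if name not in vars(cls)]
        if missing:
            raise TypeError("%s must define %s" % (cls.__name__, ", ".join(missing)))


class User(RequiredAttrs):
    dbo_tablename = "users"
    primary_key = "id"  # placeholder; the real code expects a Column here


try:
    class Broken(RequiredAttrs):
        dbo_tablename = "broken"
except TypeError as e:
    print(e)  # Broken must define primary_key
```

Because the check uses the subclass's own __dict__, an intermediate abstract base class would also have to define both attributes; a looser check could use hasattr instead.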

To select records from the database, the select() function can be called on the class. It returns a DbSelectQuery (along with the DbQueryColumnSet used to build it, which is handy for adding where() clauses) which, when executed, returns a list of instances of the DbObject subclass it was called on.
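The filter that select() installs reads rows in batches with cursor.fetchmany() and builds one instance per row. Here is the same loop in isolation against sqlite3, with a hypothetical plain User class in place of a DbObject and a deliberately small batch size so the batching is exercised. The column order in the SELECT must match the order used to pair the values back up, which is why the original iterates the same column set for both.

```python
import sqlite3


class User(object):
    """Hypothetical row class; the real code builds DbObject subclasses."""
    def __init__(self, id, name):
        self.id = id
        self.name = name


def fetch_objects(cur, cls, names, batch_size=2):
    """Read the cursor in batches and build one cls instance per row."""
    output = []
    block = cur.fetchmany(batch_size)
    while block:  # fetchmany returns an empty sequence when no rows remain
        for row in block:
            output.append(cls(**dict(zip(names, row))))
        block = cur.fetchmany(batch_size)
    return output


conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
cur.executemany("INSERT INTO users (name) VALUES (?)",
                [("alice",), ("bob",), ("carol",)])

names = ["id", "name"]
cur.execute("SELECT " + ",".join("x." + n for n in names) +
            " FROM users x ORDER BY x.id")
users = fetch_objects(cur, User, names)
print([u.name for u in users])  # ['alice', 'bob', 'carol']
```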

One flaw in this structure is that it currently assumes the primary key won't be None once it has been set. In other words, the way I did it right now does not allow for null primary keys. This is because save() treats an unset (None) primary key as meaning the object has never been stored, and so generates a DbInsertQuery instead of a DbUpdateQuery. Neither insert nor update queries include every field: immutable fields are always excluded and are then either selected afterwards or, in the case of the primary key, taken from the cursor's lastrowid.
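The insert-or-update decision in save() can be shown in a few lines with sqlite3: a None primary key means INSERT, and the new key is read back from cursor.lastrowid (listed among PEP 249's optional extensions, and provided by both sqlite3 and MySQLdb); otherwise only the changed columns go into an UPDATE. It also shows why a row whose real primary key is NULL can't be represented. save_row and the users table are hypothetical.

```python
import sqlite3


def save_row(cur, row, changed=()):
    """INSERT when row["id"] is None, else UPDATE only the changed columns.

    Column names come from the dict keys and are trusted; values are
    always passed as parameters.
    """
    if row["id"] is None:
        cols = [c for c in row if c != "id"]
        cur.execute("INSERT INTO users ({}) VALUES ({})".format(
                        ",".join(cols), ",".join("?" * len(cols))),
                    [row[c] for c in cols])
        row["id"] = cur.lastrowid  # key generated by the database
    elif changed:
        assignments = ",".join(c + "=?" for c in changed)
        cur.execute("UPDATE users SET {} WHERE id=?".format(assignments),
                    [row[c] for c in changed] + [row["id"]])
    return row


conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, age INTEGER)")

row = save_row(cur, {"id": None, "name": "alice", "age": 30})
print(row["id"])  # 1
row["age"] = 31
save_row(cur, row, changed=["age"])
cur.execute("SELECT name, age FROM users WHERE id=?", (row["id"],))
print(cur.fetchone())  # ('alice', 31)
```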

256Mb doesn't do what it used to...

So, this last week I got an email from Rackspace saying that my server was thrashing the hard drives and lowering performance for everyone else on the same machine. As a result, they had rebooted my server for me.

I made a few mistakes in the setup of this first iteration of my server: I didn't restart it after kernel updates, I ran folding@home on it while running nodejs, and I didn't have backups turned on. I had it running for well over 200 days without a reboot, during which there had been a dozen or so kernel updates. When they hard rebooted my server, it wouldn't respond at all to pings, SSH, or anything else. In fact, it behaved like the firewalls were shut (hanging on the "waiting" step rather than saying "connection refused"). I ended up having to go into their handy rescue mode and copy out my files. I only copied my www directory and the MySQL binary table files, but as you can see, I was able to restore the server from those.

This gave me an excellent opportunity to actually set up my server correctly. I no longer have to be root to edit my website files (yay!), I have virtual hosts set up in a fashion that makes sense and actually works, and overall performance seems to be improved. From now on, I will be doing the updates less frequently and when I do I will be rebooting the machine. That should fix the problem with breaking everything if a hard reboot happens.

I do pay for this hosting: 1.5 cents per hour per 256MB of RAM, plus extra for bandwidth. I only have 256MB, and since I don't make any profit off this server whatsoever at the moment, I plan on keeping it that way for now. Back in the day 256MB was a ton of memory, but it clearly no longer suffices for running much on my server (httpd + mysql + nodejs + folding@home = crash and burn).

Multiprocessing with the WebSocketServer

After spending way too much time thinking about exactly how to do it, I have got multiprocessing working with the WebSocketServer.

I have learned some interesting things about multiprocessing with Python:

- Basically no complicated objects can be sent over queues.

- Since pickle is used to send the objects, the objects must also be picklable

- Methods (i.e. callbacks) cannot be sent either

- Processes can't have new queues added after their initial creation since the queues themselves aren't picklable, so when a process is created, it has to be given its queues and that's all the queues it's ever going to get
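Since everything that goes through a multiprocessing queue is pickled, a quick way to check whether a message is safe to send is to try pickling it first. A hedged sketch (Python 3); can_send is a hypothetical helper, and because the exact exception type differs between object types and Python versions, it catches the usual candidates. OS-level resources such as sockets and locks fail the same way the lock below does.

```python
import pickle
import threading


def can_send(obj):
    """Return True if obj survives pickling (a prerequisite for Queue.put)."""
    try:
        pickle.dumps(obj)
        return True
    except (pickle.PicklingError, TypeError, AttributeError):
        return False


print(can_send({"client_id": 7, "data": b"hello"}))  # True
print(can_send(lambda msg: msg))                     # False
print(can_send(threading.Lock()))                    # False
```

This is why plain data such as client IDs and byte strings travel well between processes, while sockets and callbacks have to stay in the process that owns them.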

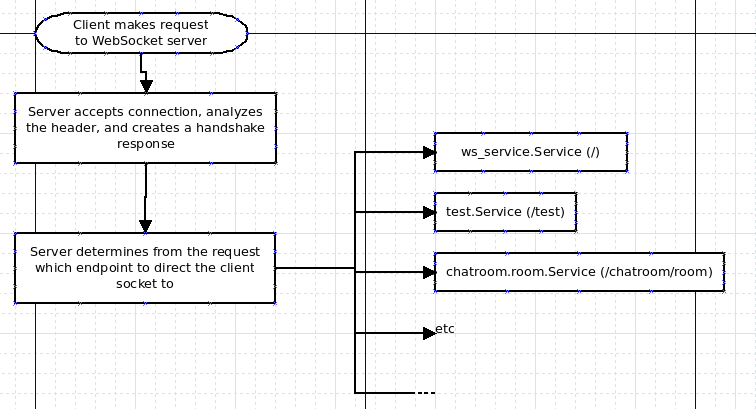

So the new model for operation is as follows:

Sadly, because methods can't be shared between processes, there is a lot of polling going on here. A lot. The service has to poll its queues to see if any clients or packets have come in, the server has to poll all the client socket queues to see if anything came in from them and all the service queues to see if the services want to send anything to the clients, etc. I guess it was a sacrifice that had to be made to be able to use multiprocessing. The advantage gained now is that each service has its own interpreter, so the services aren't all forced to share the same CPU. I would imagine this would improve performance with more intensive services, but the bottleneck will still be the actual sending and receiving to the clients, since every client on the server still has to share the same thread.
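The polling described above looks roughly like the following sketch: drain every queue without blocking using get_nowait(), which raises queue.Empty when nothing is waiting (multiprocessing.Queue raises the same queue.Empty). It uses plain queue.Queue objects so it runs in a single process; poll_once and the queue names are hypothetical.

```python
import queue


def poll_once(queues, handler):
    """Drain each queue without blocking; return how many messages were handled."""
    handled = 0
    for name, q in queues.items():
        while True:
            try:
                msg = q.get_nowait()
            except queue.Empty:
                break  # nothing more on this queue right now
            handler(name, msg)
            handled += 1
    return handled


client_packets = queue.Queue()
service_replies = queue.Queue()
client_packets.put("hello")
service_replies.put("welcome")
service_replies.put("bye")

seen = []
count = poll_once({"clients": client_packets, "services": service_replies},
                  lambda name, msg: seen.append((name, msg)))
print(count, seen)
# 3 [('clients', 'hello'), ('services', 'welcome'), ('services', 'bye')]
```

A real loop would call poll_once repeatedly with a short time.sleep() between passes, so an idle server doesn't keep a CPU core busy spinning.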

So now, the plan to proceed is as follows:

- Figure out a way to do a pre-forked server so that client polling can be done by more than one process

- Extend the built-in classes a bit to simplify service creation and make it a bit more intuitive.

As always, the source is available at https://github.com/kcuzner/python-websocket-server

Making a WebSocket server

For the past few weeks I have been experimenting a bit with HTML5 WebSockets. I don't normally focus only on software when building something, but this has been an interesting project and has allowed me to learn a lot more about the nitty-gritty of sockets and such. I have created a GitHub repository for it (it's my first time using git and I'm loving it) which is here: https://github.com/kcuzner/python-websocket-server

The server runs on a port dedicated to WebSocket-based services. It is written in Python and defines a few base classes for implementing a service. The basic structure is as follows:

Each service has its own thread and inherits from a base class that is a thread plus a queue for accepting new clients. Each client is a socket object returned by socket.accept(), wrapped in a class that allows communication with the socket via queues. The actual communication with the sockets is managed by a separate thread that handles all the encoding and decoding of WebSocket frames. Since adding a client doesn't produce much overhead, this structure could potentially be expanded to handle many, many clients.
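For reference, the frame encoding and decoding that the socket thread handles is defined in RFC 6455. Below is a minimal Python 3 sketch covering only single-frame (FIN set) text messages: server-to-client frames are sent unmasked, while client-to-server frames must be masked with a 4-byte key that is XORed over the payload. Fragmentation, control frames (ping/pong/close), and most error handling are left out; encode_text_frame, mask_client_frame, and decode_client_frame are hypothetical names, not functions from the repository.

```python
import os
import struct


def encode_text_frame(text):
    """Build an unmasked server-to-client text frame (FIN=1, opcode=0x1)."""
    payload = text.encode("utf-8")
    header = bytearray([0x81])  # FIN bit + text opcode
    length = len(payload)
    if length < 126:
        header.append(length)
    elif length < 65536:
        header.append(126)
        header += struct.pack("!H", length)  # 16-bit extended length
    else:
        header.append(127)
        header += struct.pack("!Q", length)  # 64-bit extended length
    return bytes(header) + payload


def mask_client_frame(text, key=None):
    """Build a short masked client-to-server text frame (what a browser sends)."""
    payload = text.encode("utf-8")
    if len(payload) >= 126:
        raise ValueError("sketch only handles short client frames")
    key = key or os.urandom(4)
    masked = bytes(b ^ key[i % 4] for i, b in enumerate(payload))
    return bytes([0x81, 0x80 | len(payload)]) + key + masked


def decode_client_frame(frame):
    """Decode one complete masked frame; returns (opcode, payload bytes)."""
    opcode = frame[0] & 0x0F
    masked = frame[1] & 0x80
    length = frame[1] & 0x7F
    offset = 2
    if length == 126:
        length = struct.unpack("!H", frame[2:4])[0]
        offset = 4
    elif length == 127:
        length = struct.unpack("!Q", frame[2:10])[0]
        offset = 10
    if not masked:
        raise ValueError("client frames must be masked")
    key = frame[offset:offset + 4]
    data = frame[offset + 4:offset + 4 + length]
    return opcode, bytes(b ^ key[i % 4] for i, b in enumerate(data))


print(encode_text_frame("Hello"))  # b'\x81\x05Hello'
frame = mask_client_frame("Hello", key=b"\x37\xfa\x21\x3d")
print(frame.hex())                 # 818537fa213d7f9f4d5158
print(decode_client_frame(frame))  # (1, b'Hello')
```

The "Hello" frames match the single-frame unmasked and masked examples given in RFC 6455 section 5.7.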

A few things I plan on adding to the server eventually are:

- Using processes instead of threads for the services. Due to the global interpreter lock, if this is run using CPython (which is what most people use as far as I know and also what comes installed by default on many systems), only one thread can execute Python code at a time, so all the threads effectively share a single CPU. Separate processes each get their own interpreter, so they don't have this limitation. The difficult part of all this is that it is hard to pass actual objects between processes, and I have to do some serious restructuring for the code to work without needing to pass objects (such as sockets) to services.

- Creating a better structure for web services (currently only two base classes are really available), including a generalized, thread-safe database binding so that a service could potentially split into many threads without overwhelming the database connection.
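As a rough idea of what a thread-safe database binding could look like, one simple approach is to share a single connection and serialize access to it with a threading.Lock, so many service threads can issue queries without interleaving on the same cursor. This is a sketch under that assumption (a connection pool would be the more scalable option); LockedDb is hypothetical, and check_same_thread=False is needed because sqlite3 otherwise refuses to use a connection from a thread other than the one that created it.

```python
import sqlite3
import threading


class LockedDb(object):
    """One shared connection; a lock ensures one query runs at a time."""
    def __init__(self, path):
        self._conn = sqlite3.connect(path, check_same_thread=False)
        self._lock = threading.Lock()

    def query(self, sql, args=()):
        with self._lock:
            cur = self._conn.cursor()
            cur.execute(sql, args)
            rows = cur.fetchall()  # read results before releasing the lock
            self._conn.commit()
            return rows


db = LockedDb(":memory:")
db.query("CREATE TABLE messages (room TEXT, body TEXT)")


def worker(n):
    for i in range(50):
        db.query("INSERT INTO messages VALUES (?, ?)", ("room%d" % n, str(i)))


threads = [threading.Thread(target=worker, args=(n,)) for n in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(db.query("SELECT COUNT(*) FROM messages"))  # [(200,)]
```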

Currently, the repository includes a demo chatroom service whose client-side application I should have finished and uploaded soon. It supports multiple chatrooms and multiple users, but there is no real authentication yet and there are a few features I would like to add (such as being able to see who is in the chatroom).
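For the "see who is in the chatroom" feature, the server side mostly needs a mapping from room name to the set of connected users. Here is a hypothetical sketch (ChatRooms is not a class from the repository), where send stands in for whatever pushes a message onto a client's outgoing queue:

```python
from collections import defaultdict


class ChatRooms(object):
    """Tracks which users are in which rooms and fans messages out to them."""
    def __init__(self, send):
        self._rooms = defaultdict(set)
        self._send = send  # send(user, message) delivers to one client

    def join(self, room, user):
        self._rooms[room].add(user)
        self.broadcast(room, "%s joined" % user)

    def leave(self, room, user):
        self._rooms[room].discard(user)
        if not self._rooms[room]:
            del self._rooms[room]  # forget empty rooms

    def members(self, room):
        return sorted(self._rooms.get(room, ()))

    def broadcast(self, room, message):
        for user in self.members(room):
            self._send(user, message)


outbox = []
rooms = ChatRooms(lambda user, msg: outbox.append((user, msg)))
rooms.join("lobby", "alice")
rooms.join("lobby", "bob")
rooms.leave("lobby", "alice")
print(rooms.members("lobby"))  # ['bob']
print(outbox)
# [('alice', 'alice joined'), ('alice', 'bob joined'), ('bob', 'bob joined')]
```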

Not so temporary...

Well, I have decided to put my actual website on this server. My home server has failed due to router issues, and I will probably be redesigning the entire website in the near future anyway since it uses outdated layout techniques. This server is basically the smith-marsden.org website that I work on for my dad's side of the family. I plan on downloading the contents of my home server to here over the weekend so that I can try to clean things up a bit and hopefully get this site back on the map.

I will probably post progress on the new smith-marsden site here since that is currently my main development challenge.

Additional features of the new smith-marsden site:

- Ability to invite other users given to everyone

- RSS feeds on letter submissions

- More information stored so that it is all in one private place rather than on Facebook or somewhere else which could be quite public

- New state-of-the-art layout techniques enhance the website

- AJAX allows pages to submit forms without reloading, which makes pages load faster and looks really cool.

- The entire thing is made using CakePHP, a robust framework.

- More to come as I think of them and make them...
