Kevin Cuzner's Personal Blog

Electronics, Embedded Systems, and Software are my breakfast, lunch, and dinner.

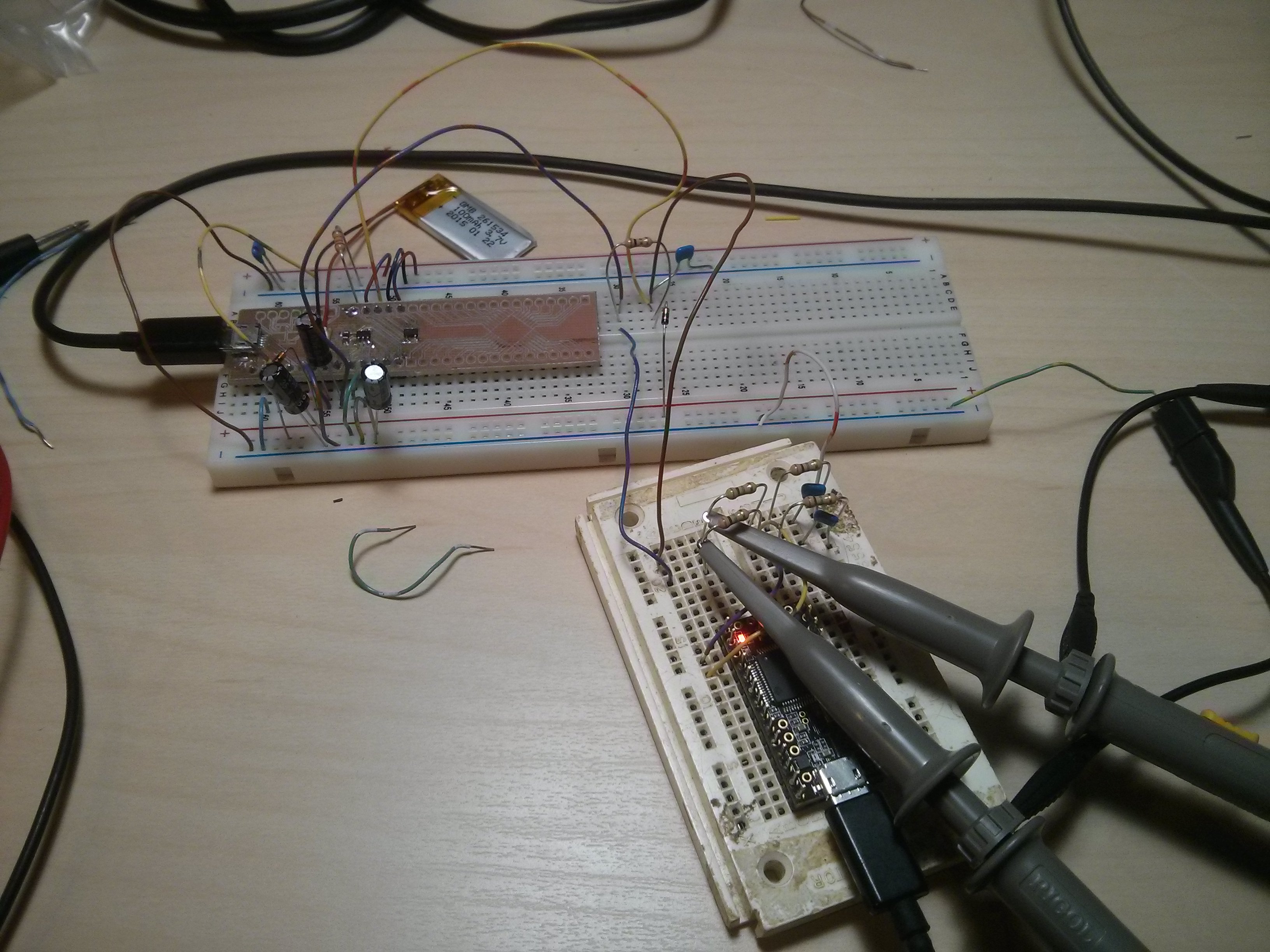

Quick-n-dirty data acquisition with a Teensy 3.1

Jul 05, 2016

The Problem

I am working on a project that involves a Li-Ion battery charger. I've never built one of these circuits before and I wanted to test the battery over its entire charge-discharge cycle to make sure it wasn't going to burst into flame because I set some resistor wrong. The battery itself is very tiny (100 mAh, 2.5 mm thick) and is going to be powering an extremely low-power circuit, hopefully over the course of many weeks between charges.

After about 2 days of taking meter measurements every 6 hours or so to see how far the voltage had dropped, I decided to automate the process. I had my trusty Teensy 3.1 lying around, so I figured it would be pretty easy to turn it into a simple data logger that measures the voltage at a very slow rate (maybe one measurement every 5 seconds). Thus was born the EZDAQ.

All code for this project is located in the repository at https://github.com/kcuzner/ezdaq

Setting up the Teensy 3.1 ADC

I've never used the ADC before on the Teensy 3.1. I don't use the Teensy Cores HAL/Arduino Library because I find it more fun to twiddle the bits and write the makefiles myself. Of course, this also means that I don't always get a project working within 30 minutes.

The ADC on the Teensy 3.1 (or the Kinetis MK20DX256) is capable of doing 16-bit conversions at 400-ish ksps. It is also quite complex and can do conversions in many different ways. It is one of the larger and more configurable peripherals on the device, probably rivaled only by the USB module. The module does not come pre-calibrated and requires a calibration cycle to be performed before its accuracy will match that specified in the datasheet. My initialization code is as follows:

```c
uint16_t temp; //scratch value for the gain calculations

//Enable ADC0 module
SIM_SCGC6 |= SIM_SCGC6_ADC0_MASK;

//Set up conversion precision and clock speed for calibration
ADC0_CFG1 = ADC_CFG1_MODE(0x1) | ADC_CFG1_ADIV(0x1) | ADC_CFG1_ADICLK(0x3); //12 bit conversion, adc async clock, div by 2 (<3MHz)
ADC0_CFG2 = ADC_CFG2_ADACKEN_MASK; //enable async clock

//Enable 32-sample hardware averaging (AVGE must be set for AVGS to take effect) and start calibration
ADC0_SC3 = ADC_SC3_CAL_MASK | ADC_SC3_AVGE_MASK | ADC_SC3_AVGS(0x3);
while (ADC0_SC3 & ADC_SC3_CAL_MASK) { } //wait for calibration to finish
if (ADC0_SC3 & ADC_SC3_CALF_MASK) //calibration failed. Quit now while we're ahead.
    return;

//Plus-side gain: sum the results, halve, then set the MSB of the 16-bit value
temp = ADC0_CLP0 + ADC0_CLP1 + ADC0_CLP2 + ADC0_CLP3 + ADC0_CLP4 + ADC0_CLPS;
temp /= 2;
temp |= 0x8000;
ADC0_PG = temp;

//Minus-side gain: same procedure using the CLM registers
temp = ADC0_CLM0 + ADC0_CLM1 + ADC0_CLM2 + ADC0_CLM3 + ADC0_CLM4 + ADC0_CLMS;
temp /= 2;
temp |= 0x8000;
ADC0_MG = temp;

//Set clock speed for measurements (no division)
ADC0_CFG1 = ADC_CFG1_MODE(0x1) | ADC_CFG1_ADICLK(0x3); //12 bit conversion, adc async clock, no divide
```

Following the recommendations in the datasheet, I selected a clock that would bring the ADC clock speed down to <4MHz and turned on hardware averaging before starting the calibration. The calibration is initiated by setting a flag in ADC0_SC3 and when completed, the calibration results will be found in the several ADC0_CL* registers. I'm not 100% certain how this calibration works, but I believe what it is doing is computing some values which will trim some value in the SAR logic (probably something in the internal DAC) in order to shift the converted values into spec.
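The gain arithmetic at the end of the calibration (sum the six results, divide by two, set the MSB of the 16-bit value, store in PG or MG) is easy to check off-target. Below is a minimal Python sketch of that procedure as described in the MK20DX256 reference manual; the function name `calibration_gain` and the register values in the example are hypothetical, not readings from a real part.

```python
def calibration_gain(cl0, cl1, cl2, cl3, cl4, cls):
    """Compute a PG/MG gain value from six calibration results.

    Sums the results, divides by two, then sets the MSB of the
    16-bit value (bit 15, i.e. 0x8000).
    """
    total = cl0 + cl1 + cl2 + cl3 + cl4 + cls
    return ((total // 2) | 0x8000) & 0xFFFF

# Hypothetical register values, for illustration only
print(hex(calibration_gain(0x0200, 0x0100, 0x0080, 0x0040, 0x0020, 0x0020)))
```

With these made-up inputs the sum is 0x400, half of that is 0x200, and setting bit 15 gives 0x8200.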

One thing to note is that I did not end up using the 16-bit conversion capability. I was a little rushed and was put off by the fact that I could not get it to use the full 0-65535 dynamic range of a 16-bit result variable. It was more like 0-10000. This made figuring out my "volts-per-value" value a little difficult. However, the 12-bit mode gave me 0-4095 with no problems whatsoever. Perhaps I'll read a little further and figure out what is wrong with the way I was doing the 16-bit conversions, but for now 12 bits is more than sufficient. I'm just measuring some voltages.
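The "volts-per-value" factor is just the reference voltage divided by the number of codes, 2^N for an N-bit conversion. A small sketch, assuming the 3.3 V reference used throughout this post maps to full scale; `counts_to_volts` is a hypothetical helper, though its 12-bit case matches the `VOLTS_PER` constant in the host software.

```python
def counts_to_volts(raw, bits=12, vref=3.3):
    """Convert a raw N-bit ADC code to volts at the pin (assumes vref is full scale)."""
    return raw * vref / (1 << bits)

# One LSB is about 0.806 mV in 12-bit mode and about 0.050 mV in 16-bit mode
lsb_12 = counts_to_volts(1, bits=12)
lsb_16 = counts_to_volts(1, bits=16)
print(f"{lsb_12 * 1000:.3f} mV, {lsb_16 * 1000:.4f} mV")
```

Even the 12-bit step is well under a millivolt at the pin, which is why 12 bits is plenty for tracking battery voltages.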

Since I planned to measure the voltages coming off a Li-Ion battery, I needed to handle the range of 3.0V-4.2V. Most of this is outside the Teensy's ADC range (max is 3.3V), so I made myself a resistor divider attenuator (with a parallel capacitor for added stability). It might have been better to use some sort of active circuit, but this is supposed to be a quick and dirty DAQ. I'll talk a little more about handling the issues that arise from this resistor divider in the section about the host software.
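As a quick sanity check on the divider: the pin sees V_in × R_bottom / (R_top + R_bottom), so to keep a fully charged 4.2 V cell under the 3.3 V limit the ratio can be at most 3.3 / 4.2 ≈ 0.786. A sketch, assuming an ideal, unloaded divider built from two equal 1 MΩ resistors; `divider_out` is a hypothetical helper.

```python
def divider_out(v_in, r_top, r_bottom):
    """Output voltage of an ideal, unloaded resistor divider."""
    return v_in * r_bottom / (r_top + r_bottom)

V_MAX_PIN = 3.3                 # Teensy 3.1 ADC input limit
max_ratio = V_MAX_PIN / 4.2     # largest usable ratio for a 4.2 V full charge

for v_batt in (3.0, 4.2):
    v_pin = divider_out(v_batt, 1e6, 1e6)   # equal resistors -> ratio 0.5
    print(f"{v_batt:.1f} V battery -> {v_pin:.2f} V at pin (limit {V_MAX_PIN} V)")
print(f"max ratio: {max_ratio:.3f}")
```

A 50% divider maps 3.0-4.2 V onto 1.5-2.1 V, comfortably inside the ADC range with headroom for resistor tolerance.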

Quick and dirty USB device-side driver

For this project I used my device-side USB driver software that I wrote in this project. Since we are gathering data quite slowly, I figured that a simple control transfer should be enough to handle the requisite bandwidth.

```c
static uint8_t tx_buffer[256];

/**
 * Endpoint 0 setup handler
 */
static void usb_endp0_handle_setup(setup_t* packet)
{
    const descriptor_entry_t* entry;
    const uint8_t* data = NULL;
    uint8_t data_length = 0;
    uint32_t size = 0;
    uint16_t *arryBuf = (uint16_t*)tx_buffer;
    uint8_t i = 0;

    switch(packet->wRequestAndType)
    {
    /* ...USB Protocol Stuff... */
    case 0x01c0: //get adc channel value (wIndex); bRequest 0x01, bmRequestType 0xC0 (device-to-host, vendor, device)
        *((uint16_t*)tx_buffer) = adc_get_value(packet->wIndex);
        data = tx_buffer;
        data_length = 2;
        break;
    default:
        goto stall;
    }

    //if we are sent here, we need to send some data
    send:
    /* ...Send Logic... */

    //if we make it here, we are not able to send data and have stalled
    stall:
    /* ...Stall logic... */
}
```

I added a control request (0x01) which uses the wIndex (not to be confused with the cleaning product) value to select a channel to read. The host software can now issue a vendor control request 0x01, setting the wIndex value accordingly, and get the raw value last read from a particular analog channel. In order to keep things easy, I labeled the analog channels using the same format as the standard Teensy 3.1 layout. Thus, wIndex 0 corresponds to A0, wIndex 1 corresponds to A1, and so forth. The adc_get_value function returns the most recent conversion result for a particular channel. Sampling is done by the ADC continuously, so the USB read doesn't initiate a conversion or anything like that. It just reads what happened on the channel during the most recent conversion.
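The magic number 0x01c0 in the switch comes from packing the first two bytes of the setup packet. Assuming `wRequestAndType` is those two bytes read as a little-endian 16-bit value (bmRequestType in the low byte, bRequest in the high byte), the key for this request can be reproduced in Python. The helper names are hypothetical; the bmRequestType bit layout (direction, type, recipient) is the standard one from the USB 2.0 specification.

```python
import struct

VENDOR, DEVICE = 2, 0   # bmRequestType type 2 = vendor, recipient 0 = device

def bm_request_type(device_to_host, req_type, recipient):
    """Build bmRequestType: bit 7 direction, bits 6..5 type, bits 4..0 recipient."""
    return (int(device_to_host) << 7) | (req_type << 5) | recipient

def request_key(b_request, bm_type):
    """Read the first two setup-packet bytes as a little-endian uint16."""
    return struct.unpack('<H', bytes([bm_type, b_request]))[0]

bm = bm_request_type(True, VENDOR, DEVICE)
print(hex(bm), hex(request_key(0x01, bm)))

# The 2-byte reply is the raw ADC code in the MCU's little-endian byte order
print(struct.unpack('<H', bytes([0xB9, 0x08]))[0])
```

This prints `0xc0 0x1c0` and then `2233`, the raw code behind the 1.799 V reading that appears later in this post.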

Host software

Since libusb is easy to use with Python via PyUSB, I decided to write the whole thing in Python. Originally I planned on some sort of fancy GUI until I realized that it would be far simpler just to output a CSV and use MATLAB or Excel to process the data. The software is simple enough that I can just put the entire thing here:

```python
#!/usr/bin/env python3

# Python Host for EZDAQ
# Kevin Cuzner
#
# Requires PyUSB

import usb.core, usb.util
import argparse, time, struct

idVendor = 0x16c0
idProduct = 0x05dc
sManufacturer = 'kevincuzner.com'
sProduct = 'EZDAQ'

VOLTS_PER = 3.3/4096 # 3.3V reference is being used

def find_device():
    for dev in usb.core.find(find_all=True, idVendor=idVendor, idProduct=idProduct):
        if usb.util.get_string(dev, dev.iManufacturer) == sManufacturer and \
                usb.util.get_string(dev, dev.iProduct) == sProduct:
            return dev

def get_value(dev, channel):
    rt = usb.util.build_request_type(usb.util.CTRL_IN, usb.util.CTRL_TYPE_VENDOR, usb.util.CTRL_RECIPIENT_DEVICE)
    # Vendor request 0x01: the device answers with a 2-byte little-endian ADC code
    raw_data = dev.ctrl_transfer(rt, 0x01, wIndex=channel, data_or_wLength=2)
    data = struct.unpack('<H', raw_data)
    return data[0] * VOLTS_PER

def get_values(dev, channels):
    return [get_value(dev, ch) for ch in channels]

def main():
    # Parse arguments
    parser = argparse.ArgumentParser(description='EZDAQ host software writing values to stdout in CSV format')
    parser.add_argument('-t', '--time', help='Set time between samples', type=float, default=0.5)
    parser.add_argument('-a', '--attenuation', help='Set channel attenuation level', type=float, nargs=2, default=[], action='append', metavar=('CHANNEL', 'ATTENUATION'))
    parser.add_argument('channels', help='Channel number to record', type=int, nargs='+', choices=range(0, 10))
    args = parser.parse_args()

    # Set up attenuation dictionary (channels without an -a entry default to 1)
    att = {int(ch): a for ch, a in args.attenuation}
    for ch in args.channels:
        att.setdefault(ch, 1)

    # Perform data logging
    dev = find_device()
    if dev is None:
        raise ValueError('No EZDAQ Found')
    dev.set_configuration()
    print(','.join(['Time'] + ['Channel ' + str(ch) for ch in args.channels]), flush=True)
    while True:
        values = get_values(dev, args.channels)
        # Undo each channel's divider; flush so redirected output reaches the file promptly
        print(','.join([str(time.time())] + [str(v / att[ch]) for ch, v in zip(args.channels, values)]), flush=True)
        time.sleep(args.time)

if __name__ == '__main__':
    main()
```

Basically, I just use the argparse module to take some command line inputs, find the device using PyUSB, and spit out the requested channel values in a CSV format to stdout every so often.

In addition to simply displaying the data, the program also processes the raw ADC values into some useful voltage values. I contemplated doing this on the device, but it was simpler to configure if I didn't have to reflash it every time I wanted to make an adjustment. One thing this lets me do is a sort of calibration using the "attenuation" values that I put into the host. The idea with these values is to compensate for a voltage divider in front of the analog input so that I can measure higher voltages, even though the Teensy 3.1 only supports voltages up to 3.3V.
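Put another way, the attenuation value a is the fraction of the input that reaches the pin, so the input voltage is recovered by dividing: V_in = V_pin / a. A minimal sketch of that step with a per-channel lookup that defaults to 1 (no divider), in the same spirit as the host script; `compensate` is a hypothetical name.

```python
def compensate(readings, attenuation):
    """Map {channel: pin volts} to {channel: input volts}.

    Channels missing from `attenuation` are assumed to have no divider (a = 1).
    """
    return {ch: v / attenuation.get(ch, 1.0) for ch, v in readings.items()}

result = compensate({0: 1.932, 1: 1.5}, {0: 0.46})
print(result)
```

Channel 0 comes back as roughly 4.2 V, while channel 1, which has no attenuation entry, is passed through unchanged.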

For example, if I plugged my 50%-ish resistor divider on channel A0 into 3.3V, I would run the following command:

```
$ ./ezdaq 0
Time,Channel 0
1467771464.9665403,1.7990478515625
...
```

We now have 1.799 for the "voltage" seen at the pin with an attenuation factor of 1. If we divide 1.799 by 3.3 we get 0.545 for our attenuation value. Now we run the following to get our newly calibrated value:

```
$ ./ezdaq -a 0 0.545 0
Time,Channel 0
1467771571.2447994,3.301005232
...
```
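The same calibration step as plain arithmetic: apply a known voltage, read the pin voltage with attenuation 1, and take the ratio. This sketch uses the numbers from the two runs above (a known 3.3 V input reading 1.7990478515625 V at the pin).

```python
KNOWN_INPUT = 3.3                  # volts applied to the divider input
pin_reading = 1.7990478515625      # reported with attenuation 1

attenuation = pin_reading / KNOWN_INPUT
print(f"attenuation = {attenuation:.4f}")      # about 0.5452

# Rounding to 0.545 and dividing back out reproduces the calibrated reading
print(f"calibrated = {pin_reading / 0.545:.6f} V")
```

Rounding the attenuation to three digits is why the calibrated value lands at 3.301 V rather than exactly 3.3 V.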

This process highlights an issue with using standard resistors. Unless they are precision resistors, the actual divider ratio will rarely match the nominal one very closely. I used four 1 MΩ resistors to make two voltage dividers. One of them had about a 46% division and the other was close to 48%. Sure, those seem close, but in this circuit I needed to be accurate to at least 50mV, and the difference between 46% and 48% is enough to throw this off. So, when deriving an input voltage from a reading taken through an imprecise voltage divider, some form of calibration is definitely needed.
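To put a number on it, suppose a fully charged 4.2 V cell sits behind the 46% divider but the host assumes 48%. A rough sketch, assuming the divider and ADC are otherwise ideal:

```python
V_TRUE = 4.2
ACTUAL_RATIO = 0.46
ASSUMED_RATIO = 0.48

v_pin = V_TRUE * ACTUAL_RATIO          # what the ADC actually sees
v_reported = v_pin / ASSUMED_RATIO     # what the host would print
error_mv = (V_TRUE - v_reported) * 1000

print(f"pin {v_pin:.3f} V, reported {v_reported:.3f} V, error {error_mv:.0f} mV")

# For comparison, one 12-bit LSB referred back to the divider input
lsb_in_mv = 3.3 / 4096 / ACTUAL_RATIO * 1000
print(f"1 LSB at the input: {lsb_in_mv:.2f} mV")
```

The ratio mismatch costs about 175 mV, roughly 100 times the 1.75 mV quantization step, so the divider calibration matters far more than ADC resolution here.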

Conclusion

After hooking everything up and getting everything to run, it was fairly simple for me to take some two-channel measurements:

```
$ ./ezdaq -t 5 -a 0 0.465 -a 1 0.477 0 1 > ~/Projects/AVR/the-project/test/charge.csv
```

This will dump the output of my program into the charge.csv file (which is measuring the charge cycle on the battery). I will get samples every 5 seconds. Later, I can use this data to make sure my circuit is working properly and observe its behavior over long periods of time. While crude, this quick and dirty DAQ solution works quite well for my purposes.
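Because the output is plain CSV, the data can also be checked from Python instead of MATLAB or Excel. A minimal sketch using only the standard library `csv` module; `summarize` is a hypothetical helper and the sample rows are made up for illustration, not measurements from this project.

```python
import csv
import io

def summarize(csv_text):
    """Return (elapsed seconds, {column: (min, max)}) for an EZDAQ-style CSV."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    times = [float(r['Time']) for r in rows]
    channels = [name for name in rows[0] if name != 'Time']
    ranges = {}
    for name in channels:
        vals = [float(r[name]) for r in rows]
        ranges[name] = (min(vals), max(vals))
    return times[-1] - times[0], ranges

# Made-up sample in the format the host script prints
sample = """Time,Channel 0,Channel 1
100.0,3.95,3.90
105.0,4.00,3.96
110.0,4.05,4.01
"""
elapsed, ranges = summarize(sample)
print(elapsed, ranges)
```

For a real log, read the file with `open('charge.csv', newline='')` and pass its contents to `summarize`.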

